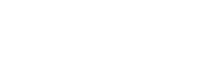

Generative AI, with its ability to create new content, is revolutionizing various industries. According to a recent market report, the global market for generative AI is expected to grow from USD 20.9 billion in 2024 to USD 136.7 billion by 2030.

This growth is driven by the increasing adoption of generative AI in sectors such as healthcare, finance, marketing, and education. At the heart of these applications are foundation models: large pre-trained models, most often large language models (LLMs), that serve as the backbone of generative AI systems. Selecting the right foundation model for your app is crucial to its success.

This blog post will guide you through the process, discussing key factors to consider and best practices.

Understanding Foundation Models

Foundation models are trained on massive datasets of text and code, enabling them to understand and generate human-like text, translate languages, write different kinds of creative content, and answer your questions in an informative way. They are the building blocks of generative AI applications, providing the intelligence and capabilities needed to perform various tasks.

Some of the most popular foundation models include:

- OpenAI's GPT-3 series: Known for its impressive language generation capabilities, GPT-3 can generate a wide range of text formats, including articles, poems, scripts, code, song lyrics, emails, and letters.

- Google's BERT: BERT (Bidirectional Encoder Representations from Transformers) is particularly effective at understanding the context of a sentence. As an encoder-only model, it is geared toward understanding text rather than generating it, and it has been used for a variety of tasks, including question answering, text summarization, and sentiment analysis.

- Meta AI's Open Pre-trained Transformer (OPT): OPT is a family of large-scale language models whose weights Meta AI has made available to developers and researchers, offering an accessible option for AI development. Its largest version is similar in size and capabilities to GPT-3, and because the weights are available, developers can customize and modify it as needed.

- Amazon's AlexaTM Language Model (ALM): Designed for conversational AI, ALM is well-suited for tasks such as question answering and dialogue generation. It is used in Amazon's Alexa devices and other products.

- Other Notable Models: Other popular foundation models include Jurassic-1, PaLM, and BLOOM.

Key Factors to Consider When Choosing a Foundation Model

The choice of a foundation model for your generative AI application can significantly impact its performance, cost, and overall success. Here are some key factors to consider when making your selection:

1. Task Requirements

- Nature of the Task: Determine the specific tasks your app will perform. Different models excel at different functions, such as text generation, translation, summarization, or question answering.

For example, if your app needs to generate creative writing prompts, a model like GPT-3 is a good choice. If your app needs to translate text from one language to another, a dedicated translation model or service, such as Google Translate, might be more suitable.

- Data Type: Consider the type of data your app will process. Some models are better suited for text-based tasks, while others can handle images, audio, or code. For example, if your app needs to work with images and text together, such as matching images to captions, a model like CLIP might be a good choice. If your app needs to generate code, a model like Codex might be more suitable.

- Level of Complexity: Assess the complexity of the tasks your app needs to accomplish. More complex tasks may require more powerful foundation models. For example, if your app needs to generate highly creative and nuanced text, a more capable model such as GPT-4 may be needed.

2. Model Size and Architecture

- Model Size: Larger models typically have greater capabilities but also require more computational resources. Evaluate your app's resource constraints to determine the optimal model size.

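For example, if you have limited computational resources, you might choose a smaller model such as one of the GPT-Neo variants (125 million to 2.7 billion parameters). Note that GPT-NeoX, at 20 billion parameters, is much smaller than GPT-3 but still needs substantial GPU memory.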
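To make the resource trade-off concrete, here is a rough back-of-the-envelope sketch of how much memory a model's weights alone occupy. It assumes 2 bytes per parameter (16-bit precision) and ignores activations, caches, and framework overhead, so real requirements will be higher. The function name `estimate_weight_memory_gb` is purely illustrative.

```python
def estimate_weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed just to hold the weights, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Parameter counts by size class; the named models are only examples of each class.
model_sizes = {
    "1.3B-parameter model": 1.3e9,
    "20B-parameter model (e.g. GPT-NeoX-20B)": 20e9,
    "175B-parameter model (e.g. GPT-3)": 175e9,
}

for name, params in model_sizes.items():
    # Assumption: weights stored at 16-bit precision (2 bytes each).
    weights_gb = estimate_weight_memory_gb(params, bytes_per_param=2)
    print(f"{name}: roughly {weights_gb:.1f} GB for weights alone")
```

Even as a rough estimate, this kind of calculation quickly shows whether a model can fit on the hardware you already have or whether you would need several accelerators.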

- Architecture: Different architectures, such as Transformer or Recurrent Neural Networks (RNNs), have their strengths and weaknesses. Choose an architecture that aligns with your task requirements and computational resources.

For example, Transformer models are generally better at capturing long-range dependencies and underpin most current foundation models, while RNNs process input one step at a time and can still be a reasonable fit for smaller-scale or streaming sequence tasks.

3. Data Quality and Quantity

- Data Quality: Ensure that the data used to train the foundation model is high-quality, relevant, and representative of the tasks your app will perform.

For example, if your app needs to generate text about a specific topic, the model should be trained on a large dataset of text related to that topic.

- Data Quantity: The amount of data used for training significantly impacts the model's performance. Larger datasets can lead to more robust and accurate models.

For example, models like GPT-3 and OPT-175B were trained on massive text datasets, which contributes to their broad capabilities.

4. Domain Specificity

- General-Purpose vs. Domain-Specific Models: Decide whether a general-purpose foundation model or a domain-specific model is more suitable for your app. Domain-specific models are trained on data from a particular domain, such as healthcare or finance, and can provide more specialized capabilities.

For example, if your app needs to generate medical diagnoses, a domain-specific model trained on medical data might be more appropriate.

5. Computational Resources

- Hardware Requirements: Assess the foundation model's hardware requirements, including the necessary GPUs or TPUs. When making a decision, consider your budget and infrastructure limitations.

For example, larger models like GPT-3 and OPT-175B are too big for a single typical GPU and require multiple high-memory GPUs or TPUs to run efficiently.

- Inference Costs: Factor in the costs associated with running the model for inference, which involves using the model to generate new content.

For example, if you plan to use a large foundation model for a high-traffic app, the inference costs could be significant.
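A quick estimate can show how inference costs scale with traffic. The sketch below assumes a hosted model billed per 1,000 tokens; the traffic figures and the price are hypothetical placeholders, not any provider's actual rates, and `monthly_inference_cost` is an illustrative name.

```python
def monthly_inference_cost(
    requests_per_day: int,
    tokens_per_request: int,
    price_per_1k_tokens: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend for a model billed per 1,000 tokens processed."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical workload and price, chosen only to illustrate the arithmetic.
cost = monthly_inference_cost(
    requests_per_day=50_000,
    tokens_per_request=800,  # prompt plus generated tokens
    price_per_1k_tokens=0.002,
)
print(f"Estimated monthly inference cost: ${cost:,.2f}")
```

Plugging in your own expected traffic and your provider's published pricing gives a first approximation you can compare against the cost of self-hosting.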

6. Licensing and Costs

- Open-Source vs. Proprietary Models: Determine whether an open-source or proprietary model is more appropriate for your needs. Open-source models offer flexibility but may require additional customization and maintenance. Proprietary models often come with commercial licenses and support.

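For example, GPT-3 is a proprietary model accessed through OpenAI's API, while OPT's weights are openly released, although the largest version, OPT-175B, is distributed under a non-commercial research license.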

- Licensing Costs: Evaluate the licensing costs associated with using different foundation models. Consider factors such as usage limits, commercialization rights, and support services.

For example, some foundation models have usage limits or require commercial licenses for specific applications.

7. Fine-Tuning and Customization

- Fine-Tuning: Assess the need to fine-tune the foundation model to adapt it to your specific use case. Fine-tuning involves training the model on a smaller dataset tailored to your task.

For example, if you want to use a foundation model to generate text in a specific style or tone, you could fine-tune it on a dataset of text written in that style or tone.

- Customization: Consider the level of customization required to achieve the desired performance. Some models offer more flexibility for customization than others.

For example, some models allow you to add custom modules or modify the architecture to suit your specific needs.

8. Additional Considerations

- Ethical Implications: Be aware of the ethical implications of using foundation models. Consider factors such as bias, fairness, and privacy.

- Model Safety: Evaluate the model's safety and reliability. Consider factors such as the risk of generating harmful or offensive content.

- Model Evolution: Keep up-to-date with the latest developments in foundation models. New models and techniques are constantly emerging, so it's essential to stay informed.

- Community Support: Consider the availability of community support and resources for the foundation model you choose. This can be helpful for troubleshooting and learning best practices.

By carefully considering these factors, you can choose the foundation model that best suits your generative AI application and achieve your desired outcomes.
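One simple way to weigh these factors against each other is a weighted scoring sheet. The sketch below is only an illustration: the candidate names, the criteria, the weights, and the 1-to-5 scores are all made up, and you would replace them with your own assessments.

```python
def rank_models(scores: dict, weights: dict) -> list:
    """Return (model, weighted_score) pairs sorted from best to worst."""
    total_weight = sum(weights.values())
    ranked = []
    for model, model_scores in scores.items():
        weighted = sum(weights[c] * model_scores[c] for c in weights) / total_weight
        ranked.append((model, round(weighted, 2)))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical weights: how much each factor matters for this particular app.
weights = {"task_fit": 4, "cost": 3, "licensing": 2, "customization": 1}

# Hypothetical scores from 1 (poor) to 5 (excellent) for three placeholder candidates.
scores = {
    "model_a": {"task_fit": 5, "cost": 2, "licensing": 3, "customization": 2},
    "model_b": {"task_fit": 4, "cost": 4, "licensing": 4, "customization": 4},
    "model_c": {"task_fit": 3, "cost": 5, "licensing": 4, "customization": 3},
}

for model, score in rank_models(scores, weights):
    print(f"{model}: {score}")
```

The ranking is only as good as the scores you feed in, but writing the weights down forces you to decide which factors actually matter most for your app.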

Best Practices for Choosing a Foundation Model

Selecting the right foundation model for your generative AI application is crucial to its success. Here are some best practices to follow:

- Start with a Smaller Model: Before scaling up, begin with a smaller foundation model to evaluate its performance and identify potential issues. This can help you avoid wasting resources on a model that is not suitable for your task.

- Experiment with Different Models: Try several candidate models on a representative sample of your own inputs and compare the results. This will show which one performs best for your specific task.

- Consider Hybrid Approaches: Explore hybrid approaches that combine multiple foundation models or incorporate additional techniques to enhance performance. For example, you might combine a foundation model with a rule-based system to improve the quality of the generated output.

- Evaluate Performance Metrics: Use appropriate performance metrics to assess the model's accuracy, relevance, and coherence. For example, you might use metrics like BLEU, ROUGE, or METEOR, which compare generated text against reference text, to evaluate the quality of the output.

- Continuously Monitor and Improve: Regularly monitor the model's performance and make necessary adjustments to ensure it remains effective. For example, you might need to fine-tune the model or update the training data as your app evolves.
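To give a feel for how overlap-based metrics such as ROUGE work, here is a minimal sketch of a unigram-overlap F1 score. It is a simplified stand-in in the spirit of ROUGE-1, not the official implementation: it splits on whitespace and skips stemming and other normalization. The function name `unigram_f1` and the sample sentences are illustrative.

```python
from collections import Counter


def unigram_f1(reference: str, candidate: str) -> float:
    """F1 score over overlapping words between a reference and a candidate text."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
candidate = "the cat sat"
print(f"Unigram F1: {unigram_f1(reference, candidate):.3f}")
```

For production evaluation you would normally rely on an established metrics library and pair automatic scores with human review, since word overlap alone says little about factual accuracy or tone.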

By following these best practices, you improve your chances of selecting the right foundation model for your generative AI application.

VLink: Your Partner in Choosing the Perfect Foundation Model

At VLink, we specialize in helping businesses harness the power of generative AI to achieve their goals. Our team of experienced data scientists and skilled developers can help you select the most appropriate foundation model for your specific application.

Here's how we can help:

- Needs Assessment: We will work with you to understand your business objectives and identify the specific tasks that you want your generative AI app to perform.

- Model Selection: Our experts will carefully evaluate your requirements and recommend the foundation model that best suits your needs. We will consider factors such as task complexity, data type, computational resources, and licensing costs.

- Model Evaluation: We will conduct thorough evaluations to assess the performance of different foundation models on your specific tasks. This will help us identify the most suitable option for your application.

- Customization: If necessary, we can customize the foundation model to better align with your specific requirements. This might involve fine-tuning the model on your dataset or incorporating additional techniques.

- Deployment and Integration: We will deploy the chosen foundation model into your generative AI application and integrate it with your existing systems.

- Ongoing Support: We will provide ongoing support and maintenance to ensure that your generative AI app continues to perform at its best.

Connect with us today to learn more about how VLink can help you choose the ideal foundation model for your generative AI app.

Shivisha Patel
