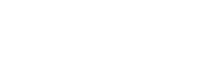

Financial crime in the Gulf region has reached an inflection point. With cyberattacks increasing 30% year-over-year across the GCC and digital banking adoption surpassing 75% of consumers, traditional fraud prevention has become fundamentally inadequate. The sophistication of modern fraud schemes - from synthetic identity fraud to AI-generated deepfakes - has outpaced the capabilities of legacy rule-based systems that most regional banks still rely upon.

The numbers paint a stark picture: organizations globally lose approximately 5% of annual revenue to fraud, with median losses per case reaching $145,000. In the Middle East, where corruption cases often involve higher-value transactions, these figures escalate significantly. Meanwhile, 70% of regional executives acknowledge that generative AI has amplified their cyber risk exposure - compared to just 55% globally. The asymmetry is clear: criminals are leveraging advanced AI capabilities while many financial institutions remain anchored to detection methodologies designed for a pre-digital era.

This analysis examines what AI-powered fraud detection actually delivers for GCC financial institutions: measurable outcomes, implementation frameworks, and the strategic considerations that separate successful deployments from expensive failures. Whether your organization is evaluating initial AI adoption or optimizing existing deployments, this guide provides the technical and business context required for informed decision-making.

Why Fraud in GCC Finance Is Spiking - And Why AI Has Become Mandatory

The fraud landscape in GCC financial services has transformed dramatically in recent years. Digital acceleration, while essential for competitive positioning and customer experience, has simultaneously expanded the attack surface available to sophisticated criminal enterprises. The region's rapid adoption of digital payment systems, open banking initiatives, and mobile-first financial services has created new vulnerabilities that traditional fraud detection cannot adequately address.

The AI-powered fraud detection market in the GCC is projected to reach $1.2 billion by 2025, driven by three converging factors: regulatory mandates requiring advanced detection capabilities, the exponential growth in digital transaction volumes, and the increasing sophistication of fraud attacks that render rule-based systems obsolete. Financial institutions across the UAE and Saudi Arabia are recognizing that legacy approaches - characterized by static rules, batch processing, and high false positive rates - cannot scale to meet these challenges.

The Compliance-First Leap: CBUAE Notice 3057 and SAMA Requirements

Regulatory frameworks in the GCC have evolved from permissive guidance to prescriptive requirements. CBUAE Notice 3057 establishes specific mandates for transaction monitoring, suspicious activity reporting, and customer due diligence that effectively require real-time AI capabilities. The regulation emphasizes risk-based approaches that dynamically adjust scrutiny based on customer behavior profiles - a capability that static rule systems cannot provide.

SAMA's governance framework adds another dimension: explainability. Saudi regulators require that AI-driven decisions, particularly those affecting customer transactions, be auditable and explainable. This means black-box models, regardless of their predictive accuracy, are insufficient for compliance. Banks must demonstrate clear reasoning chains for fraud decisions - a requirement that has significant implications for model architecture and vendor selection. Organizations leveraging machine learning development services must prioritize explainable AI architectures from the outset.

The Personal Data Protection Law (PDPL) in Saudi Arabia and similar frameworks across the GCC introduce data sovereignty requirements that affect AI deployment architecture. Models must be trained and operated within approved jurisdictions, with clear data residency controls. This has accelerated adoption of regional cloud deployments from providers like Oracle (Saudi Arabia), Microsoft Azure (UAE), and AWS (Bahrain) that offer compliant infrastructure.

The Shift From Rules-Based to Behavioral AI: What Has Changed

Traditional fraud detection relies on predetermined rules: flag transactions above threshold X, block IP addresses from country Y, require additional verification for transactions at time Z. These rules, while straightforward to implement and audit, suffer from fundamental limitations in modern fraud environments. Criminals quickly learn rule thresholds and structure activities to evade detection. Legitimate customers experience friction when their behavior accidentally triggers rules. And the maintenance burden of keeping rules current across evolving fraud patterns consumes significant operational resources.
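A minimal sketch of the static rules described above may help make the limitation concrete. The threshold, country code, and hour window below are hypothetical placeholders for X, Y, and Z, not values from any real deployment.

```python
from dataclasses import dataclass

# Hypothetical placeholders for the X, Y, and Z named in the text.
AMOUNT_THRESHOLD = 10_000.0      # "threshold X"
BLOCKED_COUNTRIES = {"XX"}       # "country Y" (placeholder code)
STEP_UP_HOURS = range(0, 5)      # "time Z": 00:00-04:59 local time


@dataclass
class Transaction:
    amount: float
    ip_country: str
    hour: int  # local hour of day, 0-23


def evaluate_rules(txn: Transaction) -> list[str]:
    """Return the names of every static rule the transaction trips."""
    hits = []
    if txn.amount > AMOUNT_THRESHOLD:
        hits.append("FLAG_AMOUNT")
    if txn.ip_country in BLOCKED_COUNTRIES:
        hits.append("BLOCK_COUNTRY")
    if txn.hour in STEP_UP_HOURS:
        hits.append("STEP_UP_TIME")
    return hits
```

A transaction of 9,999 from an unlisted country at midday trips no rule at all, which is exactly the evasion pattern the paragraph describes: once a threshold is known, activity can be structured just beneath it.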

Why Rule-Based Systems Consistently Fail in High-Volume Environments

Consider a typical scenario: a VIP banking customer travels internationally for business and makes purchases in multiple countries within hours. A rule-based system, detecting geographic anomalies, blocks the transactions and requires manual verification. The customer experiences friction and frustration. The bank's fraud team spends time investigating a legitimate transaction. Meanwhile, a sophisticated criminal using synthetic identity credentials that conform to expected patterns completes fraudulent transactions undetected.

This pattern - high false positives for legitimate customers, missed detections for sophisticated fraud - represents the core failure mode of rule-based approaches. Industry data indicates that traditional systems generate false positive rates exceeding 90% in some implementations, meaning fraud analysts spend the vast majority of their time investigating legitimate transactions rather than actual criminal activity. The operational cost is significant; the customer experience impact is equally damaging.

Rules-Based vs AI-Powered Fraud Detection Comparison

| Capability | Rules-Based Systems | AI-Powered Detection |
| --- | --- | --- |
| Detection Speed | Batch processing (minutes to hours) | Real-time (<100ms decisioning) |
| False Positive Rate | High (often >90%) | Low (<5% with behavioral models) |
| Novel Fraud Detection | Cannot detect unknown patterns | Identifies anomalies in real-time |
| Adaptation Speed | Manual updates (weeks to months) | Continuous learning (daily/weekly) |
| Regulatory Compliance | Limited audit trails | Explainable AI with full audit logs |

How Behavioral Biometrics and Device Intelligence Transform Detection

Modern AI-powered fraud detection operates on an entirely different paradigm. Rather than evaluating transactions against static rules, behavioral AI builds dynamic profiles of how individual customers interact with financial services. This includes typing patterns and keystroke dynamics, mouse movement characteristics, device handling (accelerometer data on mobile), navigation patterns within applications, and transaction timing and velocity patterns.

These behavioral signatures are extraordinarily difficult to replicate. Even if a criminal obtains valid credentials, their behavioral patterns will differ from the legitimate account holder. Advanced implementations can detect Remote Access Trojan (RAT) attacks, where criminals remotely control victim devices, by identifying the characteristic latency and interaction patterns that differ from direct device usage. The system delivers sub-100ms decisioning while maintaining detection accuracy that far exceeds rule-based alternatives. Banks implementing conversational AI development services are increasingly integrating fraud detection capabilities into customer-facing chatbots for immediate alert verification.
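To illustrate the idea of comparing a session against a stored behavioral signature, here is a deliberately simplified sketch that uses a single feature, the interval between keystrokes. The function name and the sample timings are assumptions for illustration; production systems combine many signals and far richer models.

```python
from statistics import mean, stdev


def keystroke_deviation(profile_ms: list[float], session_ms: list[float]) -> float:
    """Distance of the session's mean inter-key interval from the profile mean,
    measured in profile standard deviations."""
    baseline_mean = mean(profile_ms)
    baseline_std = stdev(profile_ms)  # sample standard deviation, needs 2+ values
    session_mean = mean(session_ms)
    if baseline_std == 0:
        return 0.0 if session_mean == baseline_mean else float("inf")
    return abs(session_mean - baseline_mean) / baseline_std


# Hypothetical inter-key intervals in milliseconds for the account holder.
profile = [100.0, 110.0, 90.0, 105.0, 95.0]
same_user = keystroke_deviation(profile, [102.0, 98.0, 101.0])
different_user = keystroke_deviation(profile, [180.0, 200.0, 190.0])
```

In this toy data the first session stays well under one standard deviation from the profile, while the second sits many deviations away and would feed a higher risk score.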

Real Enterprise Results: What GCC Banks Are Achieving Today

The theoretical benefits of AI-powered fraud detection translate into measurable operational and financial outcomes. The following case studies represent documented implementations, though specific institution names are withheld for confidentiality. These results illustrate what is achievable with properly architected and implemented AI fraud detection systems in the GCC context.

Case Study 1: UAE Tier-1 Bank Achieves CBUAE Notice 3057 Compliance

A leading UAE retail bank faced dual challenges: achieving compliance with CBUAE Notice 3057 requirements while reducing the customer friction generated by their legacy fraud detection system. Their existing rule-based approach generated excessive false positives, requiring manual review of thousands of legitimate transactions daily while still missing sophisticated fraud attacks.

The implementation deployed behavioral biometrics across digital channels, integrated device intelligence for risk scoring, and established explainable AI models that met CBUAE audit requirements. Results achieved: 90% reduction in customer authentication friction, 99% prevention rate for account takeover attacks, full compliance with Notice 3057 real-time monitoring requirements, and a 60% reduction in fraud investigation workload. The bank's fraud operations team, previously overwhelmed with false positive investigations, redirected capacity toward proactive threat hunting and customer experience optimization.

Case Study 2: Saudi Financial Institution Prevents Procurement Fraud

A Saudi Arabia-based financial services company, aligned with Vision 2030 digital transformation initiatives, identified procurement fraud as a significant risk area. Traditional controls had failed to detect a scheme involving collusion between internal staff and external vendors. The organization implemented AI-powered anomaly detection across procurement workflows, analyzing patterns in vendor relationships, invoice timing, approval sequences, and payment distributions.

The AI system identified statistical anomalies that rule-based controls had missed: unusual concentration of contracts with specific vendors, invoice amounts clustered just below approval thresholds, and timing patterns suggesting coordinated submission. Implementation of enterprise cloud consulting services enabled secure deployment within Saudi data residency requirements. The result: detection and prevention of fraud that had been ongoing for multiple years, recovery of significant previously lost funds, and establishment of continuous monitoring that prevents similar schemes.
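One of the signals mentioned above, invoice amounts clustered just below an approval threshold, can be sketched with a simple ratio. The 5% band and the sample amounts are assumptions chosen for illustration, not details of the case study.

```python
def share_just_below(amounts: list[float], threshold: float, band: float = 0.05) -> float:
    """Fraction of invoices that fall within `band` (a ratio) below the threshold."""
    if not amounts:
        return 0.0
    lower = threshold * (1 - band)
    near = [a for a in amounts if lower <= a < threshold]
    return len(near) / len(amounts)


# Hypothetical vendor invoices against a hypothetical 50,000 approval limit.
vendor_invoices = [49_500.0, 49_900.0, 48_000.0, 12_000.0, 49_990.0]
ratio = share_just_below(vendor_invoices, threshold=50_000.0)
```

A vendor whose ratio is far above that of comparable vendors would be a candidate for review; the comparison baseline itself would need to come from the institution's own data.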

Case Study 3: Regional Bank Implements FRAML Convergence

A mid-sized regional bank operating across multiple GCC jurisdictions recognized that their separate fraud and AML operations created inefficiencies and blind spots. Suspicious activity that indicated fraud might also indicate money laundering, but siloed systems meant that analysts in each function were duplicating investigations or missing connections entirely.

The bank implemented a unified FRAML (Fraud and Anti-Money Laundering) platform powered by AI that consolidated transaction monitoring, alert generation, and case management. Shared behavioral profiles meant that patterns identified by fraud detection enriched AML analysis and vice versa. The results: a 50% reduction in investigation time through elimination of duplicate efforts, improved detection of complex financial crime that spans both fraud and money laundering typologies, and streamlined regulatory reporting that satisfied requirements for both CBUAE fraud and AML frameworks.

The CBUAE-Ready AI Fraud Detection Framework (4 Phases)

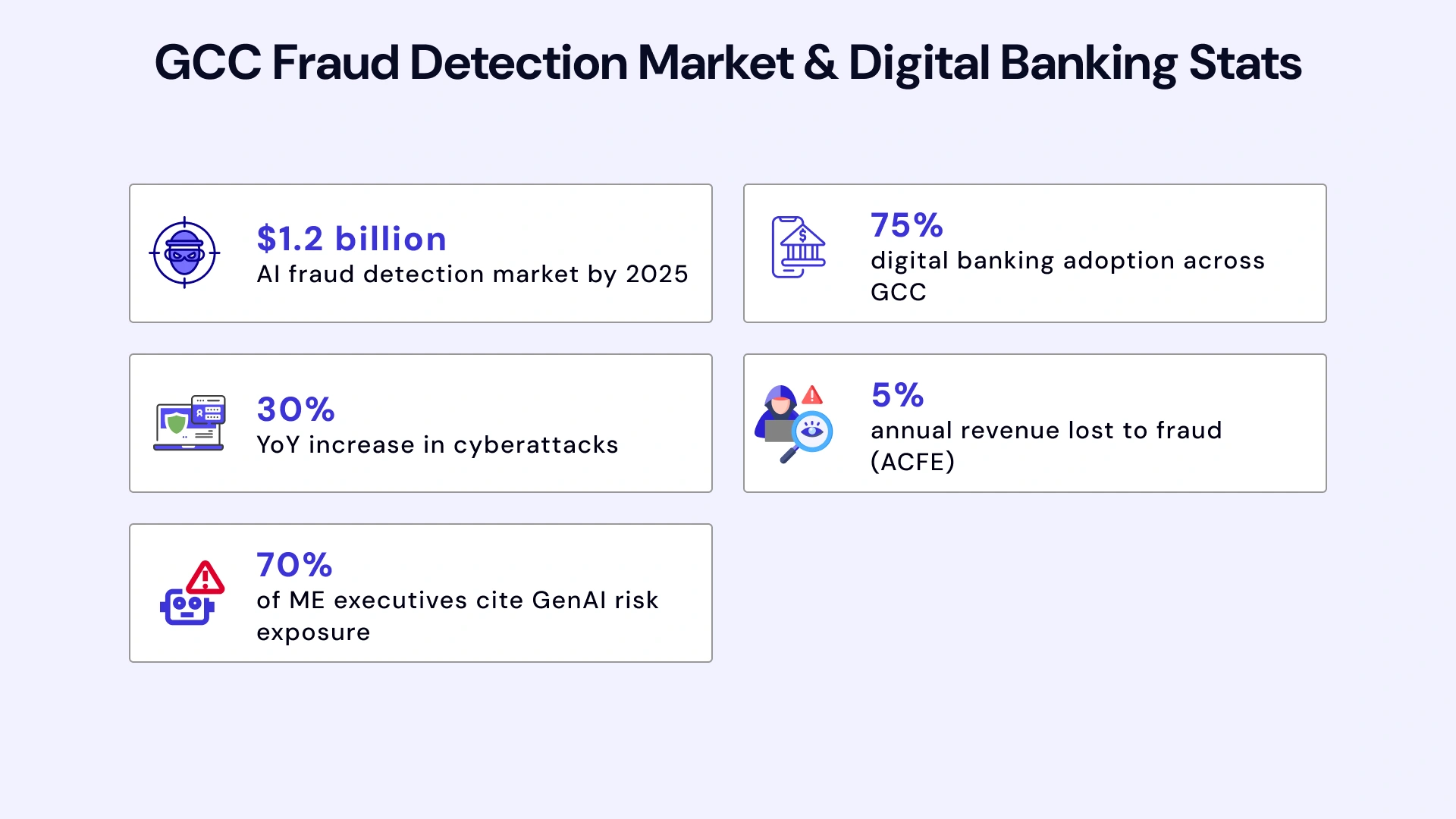

Successful AI fraud detection implementation requires a structured approach that addresses technical, operational, and regulatory dimensions. This framework, developed through multiple GCC deployments, provides a roadmap for organizations seeking to achieve both operational excellence and regulatory compliance. Each phase builds upon the previous, creating a sustainable foundation for continuous improvement.

Phase 1: Data Foundation and Sovereignty Architecture

Before any AI model can be deployed, organizations must establish compliant data infrastructure. This includes data residency compliance, ensuring that all personal and transaction data remains within approved jurisdictions per PDPL and CBUAE requirements. For Saudi implementations, this typically means Oracle Cloud Saudi Arabia or Microsoft Azure UAE with data mirroring. UAE implementations commonly leverage AWS Bahrain or Azure UAE regions. The cloud infrastructure services selected must demonstrate certified compliance with regional data protection requirements.

Data integration represents the second critical component. AI fraud detection requires access to transaction data, customer profiles, device telemetry, and behavioral signals. Legacy core banking systems often lack APIs for real-time data access. Phase 1 establishes the data pipelines and integration architecture required to feed AI models with the comprehensive data they require for accurate detection.

Phase 2: Behavioral Intelligence Layer Implementation

With data infrastructure established, Phase 2 deploys the behavioral intelligence capabilities that differentiate AI-powered detection from legacy approaches. This includes device fingerprinting and telemetry collection, which establish unique device signatures based on hardware characteristics, browser configurations, and behavioral patterns. It also encompasses behavioral biometrics: keystroke dynamics, mouse movement analysis, and touch patterns for mobile applications.

Advanced implementations in this phase include deepfake and synthetic media detection. As generative AI makes it increasingly easy to create convincing fake identities and documents, detection capabilities must evolve accordingly. Liveness detection, document authenticity verification, and voice biometric analysis help ensure that remote customer interactions involve legitimate individuals rather than synthetic representations. Organizations with existing DevOps consulting services relationships can accelerate this phase through established CI/CD pipelines.

Phase 3: Automated Decisioning and Real-Time Response

Phase 3 implements the decisioning layer that translates risk signals into operational actions. This includes tiered risk scoring with defined thresholds: scores 0-30 represent low risk with automatic approval, scores 31-70 indicate medium risk requiring step-up authentication, and scores 71-100 flag high risk for block and review. These thresholds must be calibrated based on institution-specific risk appetite and customer experience requirements.
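The tiered thresholds above map directly onto a small decision function. The action names are hypothetical labels; the score bands are the ones given in the text and would be recalibrated per institution.

```python
def decide(score: int) -> str:
    """Map a 0-100 risk score to the action tier described in the text."""
    if not 0 <= score <= 100:
        raise ValueError("risk score must be between 0 and 100")
    if score <= 30:
        return "approve"            # low risk: automatic approval
    if score <= 70:
        return "step_up_auth"       # medium risk: step-up authentication
    return "block_and_review"       # high risk: block and send to review
```

Keeping the bands in one function (or one configuration file) makes recalibration a single, auditable change rather than an edit scattered across channels.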

Automated response orchestration ensures that risk decisions trigger appropriate actions without manual intervention. A high-risk login attempt might automatically trigger SMS verification, while a suspicious transaction might be held pending customer confirmation via secure channel. The goal is sub-100ms response times that protect against fraud without introducing perceptible friction for legitimate transactions.

Phase 4: Governance, Explainability, and Continuous Improvement

The final phase establishes the governance framework required for regulatory compliance and operational sustainability. SAMA-compliant audit trails capture every decision, the data inputs that informed it, and the model reasoning that produced the outcome. This explainability is not optional - regulators require the ability to understand why specific transactions were flagged or approved.

Reason code generation provides human-readable explanations for fraud decisions. When a transaction is blocked, customers and front-line staff need clear explanations. When regulators audit decisions, they need documentation that demonstrates appropriate risk assessment. This phase also establishes model monitoring and retraining pipelines that ensure detection capabilities remain current as fraud patterns evolve. Financial institutions exploring AI model governance frameworks should prioritize this phase for regulatory sustainability.
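As a rough sketch of what an audit record with reason codes might contain, the example below serializes one decision together with its inputs and human-readable reasons. The field names and the reason-code catalogue are illustrative assumptions; they are not taken from any SAMA or CBUAE specification.

```python
import json
from datetime import datetime, timezone

# Hypothetical reason-code catalogue; a real one would come from institution policy.
REASON_TEXT = {
    "NEW_DEVICE": "Login from a device not previously linked to this customer",
    "GEO_VELOCITY": "Locations too far apart for the time between transactions",
}


def build_audit_record(txn_id: str, score: int, decision: str,
                       inputs: dict, reason_codes: list[str]) -> str:
    """Serialize one decision, its inputs, and its reasons as a JSON line."""
    record = {
        "transaction_id": txn_id,
        "decided_at": datetime.now(timezone.utc).isoformat(),
        "risk_score": score,
        "decision": decision,
        "inputs": inputs,
        "reasons": [
            {"code": code, "text": REASON_TEXT.get(code, "Unmapped reason code")}
            for code in reason_codes
        ],
    }
    return json.dumps(record, sort_keys=True)


line = build_audit_record("T-001", 82, "block_and_review",
                          {"amount": 500.0, "channel": "mobile"}, ["NEW_DEVICE"])
```

Writing each decision as an append-only JSON line keeps the record easy to query later, when an auditor asks why a specific transaction was blocked.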

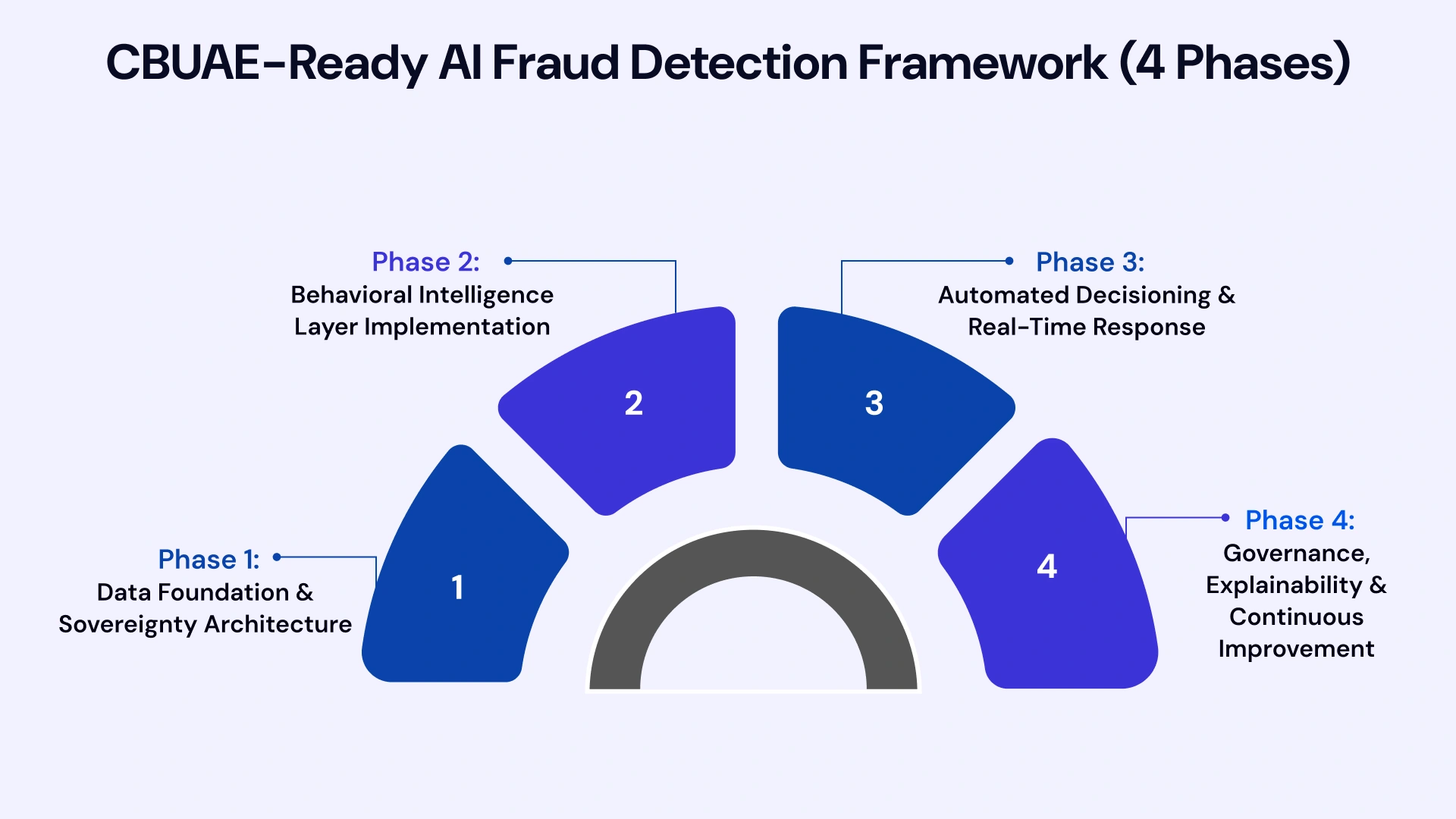

ROI Analysis: How AI Reduces Fraud Losses and Opex in GCC Banks

Investment in AI fraud detection must be justified through demonstrable return on investment. While specific ROI varies based on institution size, current fraud exposure, and implementation scope, the following analysis provides benchmark expectations based on industry data and documented GCC implementations.

Direct Fraud Loss Reduction: 40%+ Based on ACFE Data

False Positive Reduction: Up to 90% Operational Savings

Perhaps more significant than fraud loss reduction is the operational efficiency gained through false positive reduction. Traditional rule-based systems generate false positive rates that consume enormous investigation resources. AI-powered behavioral detection, by more accurately distinguishing legitimate from fraudulent activity, can reduce false positives by up to 90%. This translates directly to reduced headcount requirements in fraud operations, faster customer resolution, and improved customer experience through reduced friction.
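The arithmetic behind this operational saving is simple enough to sketch. Every input below is a made-up illustration; an institution would substitute its own alert volumes and handling times.

```python
def analyst_hours_saved(alerts_per_day: int, false_positive_rate: float,
                        fp_reduction: float, minutes_per_alert: float) -> float:
    """Daily analyst hours freed when false positives fall by `fp_reduction`."""
    false_positives = alerts_per_day * false_positive_rate
    removed = false_positives * fp_reduction
    return removed * minutes_per_alert / 60


# Hypothetical inputs: 1,000 alerts a day, 90% of them false positives,
# a 90% reduction in false positives, 10 minutes of analyst time per alert.
hours = analyst_hours_saved(1_000, 0.90, 0.90, 10.0)
```

With these illustrative inputs the result is roughly 135 analyst hours per day, which shows why the false positive line often dominates the business case.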

AI Fraud Detection ROI Components

| ROI Category | Typical Improvement | Value Driver |
| --- | --- | --- |
| Direct Fraud Losses | 40-60% reduction | Real-time detection of sophisticated attacks |
| False Positive Costs | Up to 90% reduction | Behavioral accuracy vs static rules |
| Investigation Labor | 50-70% efficiency gain | Automated triage and case prioritization |
| Customer Friction | 80-90% reduction | Risk-based authentication vs blanket challenges |
| Regulatory Penalties | Avoidance/Mitigation | Compliance with CBUAE/SAMA requirements |

MDR and AI-as-a-Service: Addressing the 25,000 Analyst Talent Shortage

The Middle East faces a significant cybersecurity talent shortage, with estimates indicating a gap of over 25,000 skilled professionals across the region. This shortage makes it challenging for financial institutions to build and maintain in-house AI fraud detection capabilities. Managed Detection and Response (MDR) services and AI-as-a-Service models provide alternatives that deliver enterprise-grade capabilities without requiring extensive in-house expertise.

These service models can reduce total cost of ownership by 40-60% compared to fully in-house implementations while providing access to specialized expertise and continuously updated detection capabilities. For mid-sized GCC financial institutions, this approach often provides the optimal balance of capability, cost, and operational simplicity. Organizations evaluating implementation approaches should consider fintech software development services that offer flexible engagement models.

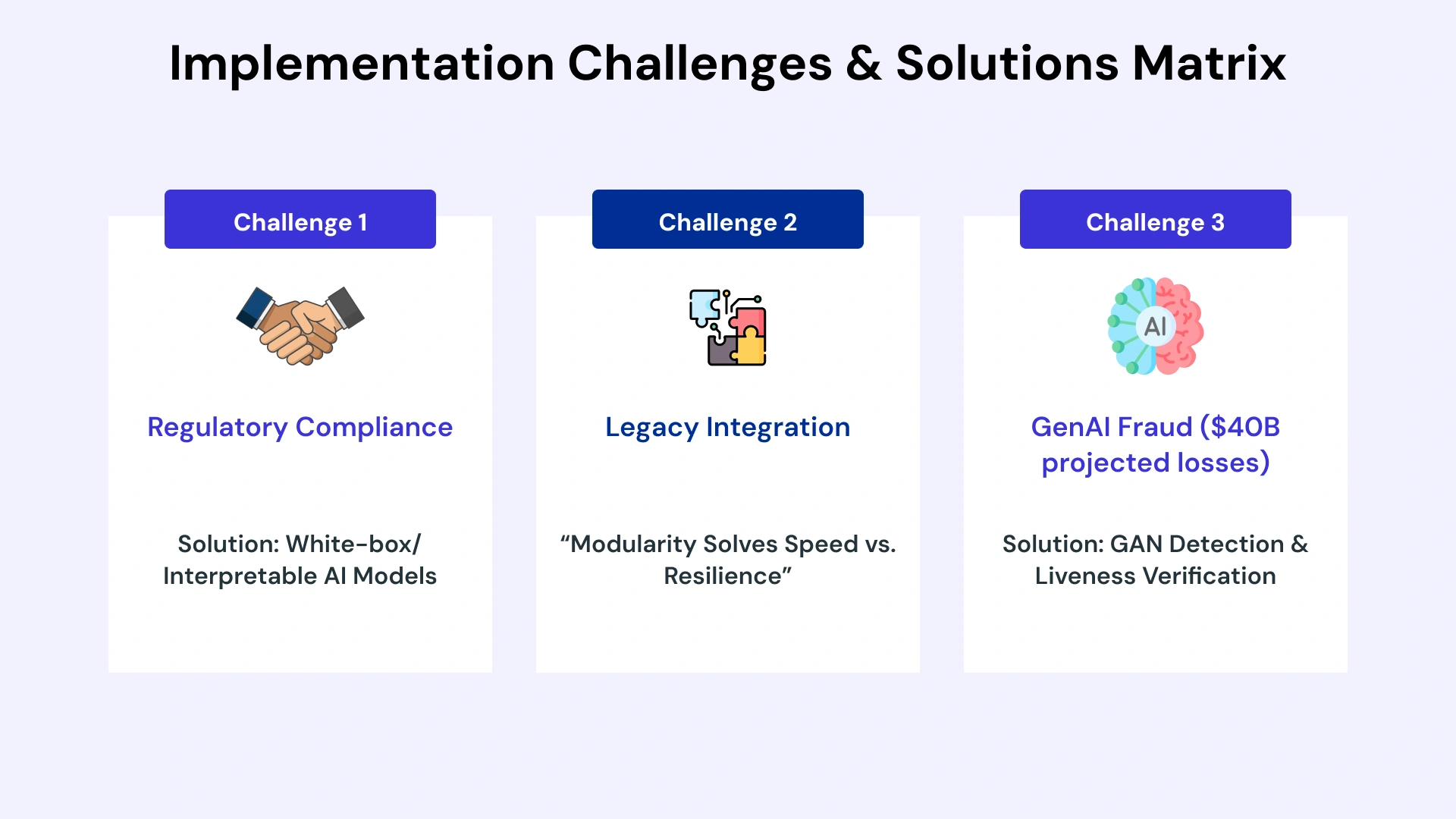

Implementation Challenges GCC Banks Should Expect (And How to Solve Them)

AI fraud detection implementation is not without challenges. Organizations that approach deployment with awareness of common obstacles are better positioned to navigate them successfully. The following are the most significant challenges encountered in GCC implementations, along with proven mitigation strategies.

Challenge 1: Regulatory Compliance and Explainability Requirements

GCC regulators, particularly SAMA, require that AI-driven decisions be explainable. Many advanced machine learning models, particularly deep learning approaches, operate as black boxes that cannot readily explain their decisions. This creates a tension between model accuracy and regulatory compliance.

The solution lies in white-box or interpretable AI architectures that provide clear reasoning chains for every decision. Techniques include decision trees and gradient boosting models that inherently provide feature importance rankings, SHAP (SHapley Additive exPlanations) values that quantify each input's contribution to the output, and rule extraction methods that convert complex models into human-readable logic. These approaches trade some theoretical accuracy for the practical requirement of regulatory compliance.
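To show what a per-input contribution looks like, the sketch below uses the simplest case: a linear scoring model with features treated as independent, where the SHAP value of each feature reduces to its weight times its deviation from the average input. The weights, averages, and feature names are hypothetical, and this hand-rolled version stands in for a full SHAP library only for illustration.

```python
def linear_shap(weights: dict[str, float], baseline: dict[str, float],
                x: dict[str, float]) -> dict[str, float]:
    """Per-feature contribution w_i * (x_i - mean_i) for a linear model."""
    return {name: w * (x[name] - baseline[name]) for name, w in weights.items()}


def top_reasons(contributions: dict[str, float], n: int = 2) -> list[str]:
    """Feature names pushing the score up the most, largest first."""
    positive = {k: v for k, v in contributions.items() if v > 0}
    return sorted(positive, key=positive.get, reverse=True)[:n]


# Hypothetical model weights, population averages, and one transaction.
weights = {"amount_zscore": 0.8, "new_device": 1.5, "account_age_years": -0.2}
baseline = {"amount_zscore": 0.0, "new_device": 0.1, "account_age_years": 5.0}
txn = {"amount_zscore": 2.0, "new_device": 1.0, "account_age_years": 6.0}

contributions = linear_shap(weights, baseline, txn)
reasons = top_reasons(contributions)
```

The contributions sum to the difference between this transaction's score and the average score, so the ranked list can be turned directly into reason codes that an auditor can check against the model.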

Challenge 2: Legacy System Integration and Data Quality

Many GCC banks operate core banking systems that were implemented decades ago and lack modern API capabilities. Extracting the real-time data required for AI fraud detection from these systems presents significant technical challenges. Additionally, data quality issues - inconsistent formats, missing fields, duplicate records - can undermine model accuracy.

Successful implementations typically allocate 30-40% of project timeline to data preparation and integration work. This includes data normalization pipelines that standardize formats across source systems, real-time streaming architectures that capture transaction data as it occurs, and data quality monitoring that identifies and addresses issues before they affect model performance. Partners with experience in legacy system modernization and cloud migration services can significantly accelerate this phase.
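A small sketch of a normalization step can make the data-preparation work more tangible. The source field names (`TransactionID`, `AMT`, `TXN_DATE`) and the date formats are hypothetical stand-ins for whatever the legacy systems actually emit.

```python
from datetime import datetime

# Hypothetical date formats used by different source systems.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")


def parse_date(raw: str) -> str:
    """Try each known format and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def first_present(rec: dict, *keys: str):
    """Return the first non-missing value among the candidate field names."""
    for key in keys:
        if rec.get(key) is not None:
            return rec[key]
    return None


def normalize(records: list[dict]) -> list[dict]:
    """Standardize field names and formats, skip incomplete records, drop duplicates."""
    seen, clean = set(), []
    for rec in records:
        txn_id = first_present(rec, "txn_id", "TransactionID")
        amount = first_present(rec, "amount", "AMT")
        date = first_present(rec, "date", "TXN_DATE")
        if txn_id is None or amount is None or date is None:
            continue  # a real pipeline would route this to a data-quality queue
        txn_id = str(txn_id)
        if txn_id in seen:
            continue  # duplicate record
        seen.add(txn_id)
        clean.append({"txn_id": txn_id, "amount": float(amount),
                      "date": parse_date(date)})
    return clean
```

Counting how many records are skipped at each check gives a first version of the data quality monitoring mentioned above.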

Challenge 3: Generative AI Fraud and Emerging Threat Vectors

The same generative AI capabilities that enable business innovation also empower sophisticated fraud. Deepfake videos for remote identity verification, AI-generated voices for phone-based fraud, and synthetic documents for account opening represent emerging threats that traditional detection cannot address. Projected losses from AI-powered fraud could reach $40 billion globally by 2027.

Counter-AI capabilities must be integral to modern fraud detection. This includes Generative Adversarial Network (GAN) detection that identifies synthetic media artifacts, liveness detection that verifies human presence in video verification, and document forensics that detect AI-generated or manipulated documents. The arms race between fraud AI and detection AI will accelerate, requiring continuous model updates and threat intelligence integration.

Decision Framework: How CROs and CTOs Should Evaluate AI Fraud Vendors

Selecting an AI fraud detection vendor or implementation partner requires structured evaluation across multiple dimensions. The following framework provides CROs and CTOs with criteria that differentiate solutions capable of delivering GCC-specific requirements from those that will fail to meet regulatory or operational expectations.

KPI Framework: Essential Metrics for Vendor Evaluation

Effective vendor evaluation requires specific, measurable criteria rather than general capability claims. The following KPIs should form the basis for any evaluation scorecard.

Vendor Evaluation KPI Framework

| KPI Category | Target Benchmark | Evaluation Criteria |
| --- | --- | --- |
| False Positive Rate | <5% | Demonstrated reduction from baseline in POC |
| Detection Latency | <100ms | Real-time decisioning for transaction approval |
| Regulatory Compliance | CBUAE/SAMA certified | Documented compliance with Notice 3057 and SAMA frameworks |
| Model Update Frequency | Weekly minimum | Continuous learning with rapid adaptation to new threats |
| Explainability | Full audit trail | Human-readable reason codes for every decision |
| ROI Timeline | <18 months | Documented ROI from reference customers |
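The quantitative rows of this scorecard can be checked mechanically during a proof of concept. In the sketch below the targets come from the KPI table, while the key names and the measured values are hypothetical.

```python
# Targets taken from the KPI table; key names are illustrative.
TARGETS = {
    "false_positive_rate": 0.05,   # must be below 5%
    "detection_latency_ms": 100,   # must be below 100 ms
    "roi_months": 18,              # must be below 18 months
    "model_update_days": 7,        # weekly minimum: at most 7 days between updates
}


def failed_kpis(measured: dict[str, float]) -> list[str]:
    """Names of KPIs that are missing or miss their target."""
    failures = []
    for name, limit in TARGETS.items():
        value = measured.get(name)
        strict = name != "model_update_days"  # the weekly target allows exactly 7 days
        if value is None or (value >= limit if strict else value > limit):
            failures.append(name)
    return failures


# Hypothetical POC measurements for one vendor.
poc = {"false_positive_rate": 0.03, "detection_latency_ms": 85,
       "roi_months": 20, "model_update_days": 7}
misses = failed_kpis(poc)
```

The qualitative rows (regulatory certification and explainability) still need documentary review; this check only covers the numbers.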

Build vs Buy vs Hybrid: Strategic Options for GCC Banks

GCC financial institutions face a strategic choice regarding AI fraud detection: build capabilities in-house, purchase commercial solutions, or pursue hybrid approaches. Each option presents distinct advantages and challenges.

Building in-house provides maximum customization and control but requires significant investment in data science talent, infrastructure, and ongoing maintenance. This approach is typically viable only for the largest regional banks with substantial technology budgets and long-term commitment to AI development.

Commercial solutions offer faster time-to-value and proven capabilities but may lack flexibility for institution-specific requirements. Integration with existing systems and data sovereignty compliance must be carefully evaluated.

Hybrid approaches, combining commercial platforms with custom development for institution-specific use cases, often provide the optimal balance for mid-sized GCC banks. This approach leverages vendor expertise for core capabilities while retaining flexibility for differentiated requirements. VLink's financial software development services support all three approaches with flexible engagement models.

Regional Compliance Checklist: UAE vs Saudi Arabia Requirements

While both UAE and Saudi Arabia require advanced fraud detection capabilities, specific regulatory requirements differ. Organizations operating across both jurisdictions must ensure compliance with both frameworks.

UAE requirements center on CBUAE Notice 3057 mandating real-time transaction monitoring and suspicious activity reporting, data residency within approved UAE or regional jurisdictions, and KYC/AML integration per CBUAE consumer protection standards.

Saudi Arabia requirements emphasize PDPL compliance for all personal data processing with explicit consent requirements, SAMA governance framework requiring explainable AI with documented decision rationale, and Vision 2030 alignment demonstrating contribution to national digital transformation goals.

The Future of Fraud Detection in UAE and Saudi Arabia

The AI fraud detection landscape continues to evolve rapidly. Understanding emerging trends enables financial institutions to make investment decisions that remain relevant as the technology matures. Organizations considering long-term generative AI development strategies should consider these trajectories.

The Death of SMS OTP: Behavioral Authentication by 2026

SMS-based one-time passwords (OTP) have been the default second factor for banking authentication across the GCC. However, SIM swapping attacks, SS7 network vulnerabilities, and social engineering have rendered SMS OTP increasingly unreliable. Regulators and industry bodies are signaling a transition away from SMS authentication toward behavioral and biometric alternatives.

By 2026, expect behavioral authentication - leveraging device fingerprints, keystroke dynamics, and interaction patterns - to replace SMS OTP as the primary additional authentication factor for GCC banking. This transition will require investments in behavioral intelligence infrastructure that forward-thinking institutions are making now.

FRAML Convergence: Unified Financial Crime Platforms

The historical separation between fraud detection and anti-money laundering operations is dissolving. Sophisticated financial crime often involves both fraud and money laundering elements, and siloed detection creates blind spots. Unified FRAML platforms that share behavioral profiles, alert correlation, and case management across both functions will become the standard architecture.

This convergence offers significant efficiency benefits - reduced duplicate investigations, improved detection through shared intelligence, and streamlined regulatory reporting. GCC institutions currently operating separate fraud and AML functions should begin planning convergence roadmaps.

Hyper-Personalized Risk Scoring: Individual Customer Risk Profiles

Current risk scoring typically applies segment-level risk profiles - different thresholds for retail versus corporate, or domestic versus international transactions. The next generation of AI fraud detection will enable truly individualized risk profiles that adapt in real-time to each customer's unique behavioral patterns.

This hyper-personalization delivers dual benefits: reduced friction for low-risk customers whose transactions align with their established patterns, and enhanced detection for anomalies that deviate from individual baselines. The technology exists today; implementation requires the behavioral data infrastructure discussed in earlier sections.
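An individual baseline can be maintained incrementally, one transaction at a time, without storing the full history. The sketch below uses Welford's running mean and variance on transaction amounts only; the class name and the sample amounts are assumptions, and a real profile would track many more dimensions.

```python
import math


class CustomerBaseline:
    """Running mean and variance of one customer's transaction amounts (Welford's method)."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, amount: float) -> None:
        """Fold one new transaction amount into the baseline."""
        self.n += 1
        delta = amount - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (amount - self.mean)

    def zscore(self, amount: float) -> float:
        """How many standard deviations `amount` sits from this customer's norm."""
        if self.n < 2:
            return 0.0  # not enough history to judge
        std = math.sqrt(self._m2 / (self.n - 1))
        if std == 0:
            return 0.0 if amount == self.mean else math.inf
        return abs(amount - self.mean) / std


# Hypothetical history for one customer.
baseline = CustomerBaseline()
for amt in [100.0, 120.0, 80.0, 110.0, 90.0]:
    baseline.update(amt)
```

For this customer a 105 payment sits well inside the usual range, while a 5,000 payment is hundreds of deviations out; the same 5,000 payment could be entirely routine for a different customer with a different baseline.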

Conclusion: Turning Compliance into Competitive Advantage

AI-powered fraud detection has evolved from competitive differentiator to operational necessity for GCC financial services. Regulatory requirements from CBUAE and SAMA have effectively mandated advanced detection capabilities, while the sophistication of modern fraud - including AI-generated attacks - has rendered legacy rule-based systems obsolete.

Yet the institutions achieving the greatest success view this transformation not as a compliance burden but as a strategic opportunity. Reduced fraud losses flow directly to bottom-line improvement. Reduced false positives enhance customer experience and operational efficiency. Behavioral intelligence creates competitive moats that differentiate customer experience. The banks that execute this transition effectively will emerge with stronger customer relationships, lower operating costs, and sustainable competitive advantages.

The technology exists. The frameworks are proven. The regulatory environment demands action. The question for GCC CROs and CTOs is not whether to implement AI-powered fraud detection, but how quickly and effectively they can execute the transformation.

Shivisha Patel
