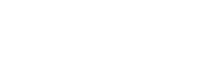

AI model governance in the BFSI sector is crucial for enabling the secure, ethical, and compliant deployment of AI across banking, financial services, and insurance. This is particularly important as the sector experiences surging cloud adoption, increasing regulatory scrutiny, and rapid growth in AI/ML adoption across financial verticals.

The convergence of advanced machine learning and critical financial decision-making necessitates a robust, proactive framework to manage risk, ensure fairness, and uphold public trust.

This blog examines the key components, best practices, and strategic imperatives for establishing a world-class AI governance framework across the banking, insurance, and broader financial sectors.

The Imperative for AI Model Governance in BFSI

The surge in AI adoption brings significant risks, such as model bias, data drift, regulatory penalties, and reputational damage. These risks demand robust AI model governance frameworks that span the entire AI lifecycle in finance, from development and deployment to oversight, continuous monitoring, and model drift detection.

The shift toward AI is driven by a need for agility and efficiency. Cloud adoption in BFSI has made cutting-edge AI Development Services more accessible; however, without effective AI governance in financial services, these technological advantages can become high-stakes liabilities. Regulators are increasingly focused on algorithmic transparency and are demanding greater rigor in model risk management within the BFSI sector.

For institutions operating in capital markets, where IT services are critical and decisions are made in milliseconds, a failure in AI compliance can have catastrophic consequences. Establishing a sophisticated AI governance structure in BFSI is no longer optional; it is the foundation for sustainable digital growth.

What is AI Model Governance in BFSI?

AI model governance in BFSI is the structured collection of policies, controls, and best practices that ensure AI models are ethical, explainable, compliant, and resilient throughout their lifecycle. A practical AI governance framework in banking and financial services encompasses the technical, operational, and ethical guardrails necessary to scale AI responsibly. It ensures that every automated decision aligns with the firm's values and regulatory mandates.

At its core, AI governance in BFSI involves managing the inherent risks associated with algorithmic decision-making. This discipline is distinct from traditional IT governance because it addresses the non-deterministic nature of machine learning models.

Key elements of a world-class framework include:

- Comprehensive policy frameworks and an AI oversight committee.

- Clear accountability and role assignments, embedding responsible AI in banking.

- Documentation and transparent explainability protocols (AI transparency and explainability).

- Standardized model risk management in BFSI and rigorous AI model validation.

- Continuous monitoring and model drift detection in AI, and proactive bias detection and mitigation in generative AI.

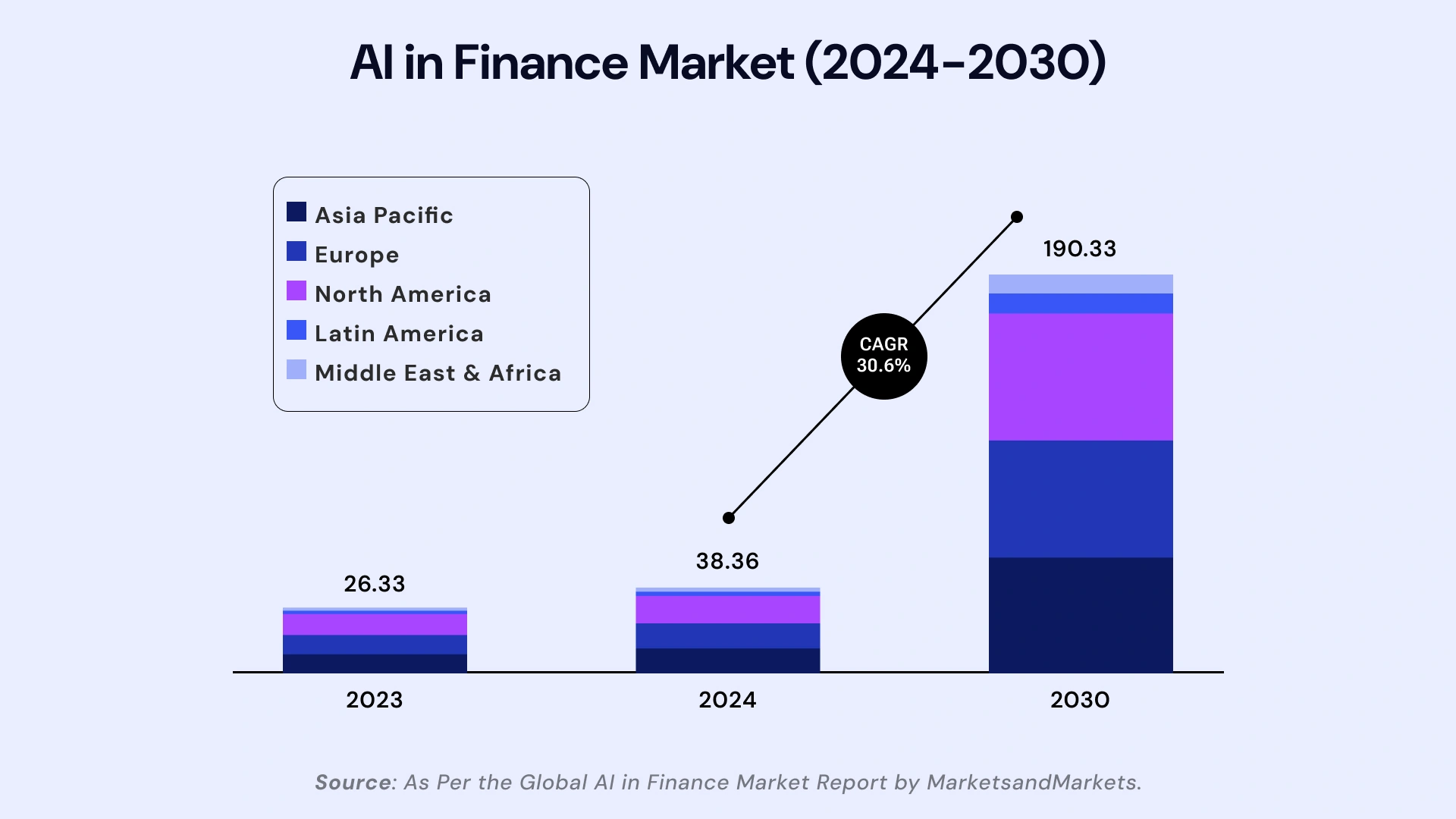

Key Components of AI Model Governance

Effective AI model governance in BFSI relies on the synergy of five critical components, each playing a vital role in securing the AI lifecycle management in finance. These components transform abstract regulatory requirements into concrete, actionable processes.

1. AI Governance Policy Framework

A unified AI policy framework forms the backbone of AI governance in financial services. Top institutions have established cross-functional committees spanning legal, technology, compliance, and ethics to oversee AI lifecycle management in finance.

This human-in-the-loop governance in financial AI models, combined with continuous monitoring and model drift detection in AI technologies, is crucial for integrating ethical AI into banking and insurance operations.

The framework defines roles, responsibilities, and decision-making authority, ensuring every model has an accountable owner. It establishes criteria for AI model validation and acceptance into production, making it a foundational element for achieving AI compliance in the BFSI sector.

2. Model Risk Management in BFSI

Model risk management (MRM) is a regulatory focus in BFSI. It ensures that every AI/ML system deployed for automated decision-making or risk assessment undergoes independent validation and stress testing.

This practice is crucial in preventing errors that could result in financial losses or unfair treatment. Many banks maintain dedicated model inventory databases and employ third-party auditors to review the algorithmic decision logic, thereby reducing the risks of compliance breaches.

Robust MRM covers the entire model lifecycle, from initial design and data sourcing to post-deployment performance tracking and eventual retirement. It is the practical application of AI governance best practices in the BFSI sector.
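A model inventory of the kind mentioned above can start as one structured record per model. The sketch below is only an illustration: the field names, the risk tiers, and the revalidation windows in `REVALIDATION_DAYS` are assumptions, not values drawn from any regulation or from a specific bank's policy.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical revalidation windows per risk tier, in days.
# Real values would come from the institution's own MRM policy.
REVALIDATION_DAYS = {"high": 180, "medium": 365, "low": 730}


@dataclass
class ModelRecord:
    model_id: str
    owner: str            # accountable owner of the model
    use_case: str
    risk_tier: str        # "high", "medium", or "low"
    last_validated: date  # date of the last independent validation

    def validation_due(self, today: date) -> bool:
        """Return True when the model is past its revalidation window."""
        window = timedelta(days=REVALIDATION_DAYS[self.risk_tier])
        return today - self.last_validated > window


def overdue_models(inventory, today):
    """List the IDs of models whose independent validation is overdue."""
    return [m.model_id for m in inventory if m.validation_due(today)]


inventory = [
    ModelRecord("credit-score-v3", "retail-risk", "credit underwriting",
                "high", date(2024, 1, 10)),
    ModelRecord("chat-router-v1", "cx-team", "customer routing",
                "low", date(2024, 1, 10)),
]
print(overdue_models(inventory, today=date(2024, 9, 1)))
```

Tying the revalidation window to the risk tier keeps the heaviest scrutiny on the models that can do the most harm, which is the intent of tiered model risk management.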

3. Data Lineage and AI Lifecycle Management

Robust data lineage tracks the origin, handling, and transformation of the data used by AI models, thereby enhancing transparency and accountability.

You cannot govern a model without governing its data. Lifecycle management encompasses model development, deployment, upgrades, and retirement, and is a critical aspect of operational resilience in AI systems.

This component ensures that model performance is reproducible and that every input and output can be traced back to its source, which is particularly important for financial AI models used in areas such as fraud detection and credit underwriting. Effective AI lifecycle management in finance serves as the technical backbone of broader AI model governance in the BFSI sector.
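One way to make traceability concrete is to fingerprint the data at every transformation step and check that the chain is unbroken. This is a minimal sketch using only the Python standard library; `LineageLog`, `LineageStep`, and the step names are hypothetical and do not correspond to any particular lineage product.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(payload: bytes) -> str:
    """SHA-256 digest used as a content fingerprint."""
    return hashlib.sha256(payload).hexdigest()


@dataclass
class LineageStep:
    name: str
    input_hash: str
    output_hash: str


@dataclass
class LineageLog:
    dataset: str
    steps: list = field(default_factory=list)

    def record(self, name: str, data_in: bytes, data_out: bytes) -> None:
        """Append one transformation step with input and output fingerprints."""
        self.steps.append(
            LineageStep(name, fingerprint(data_in), fingerprint(data_out))
        )

    def is_unbroken(self) -> bool:
        """Each step's input must equal the previous step's output."""
        pairs = zip(self.steps, self.steps[1:])
        return all(prev.output_hash == nxt.input_hash for prev, nxt in pairs)


raw = b"  Customer,Income\nA,52000\n"
cleaned = raw.strip().lower()
features = cleaned.replace(b",", b"\t")

log = LineageLog(dataset="loan-applications")
log.record("clean", raw, cleaned)
log.record("featurize", cleaned, features)
print(log.is_unbroken())
```

If a step is run on data that did not come from the previous step, the hashes no longer line up and `is_unbroken()` returns `False`, which gives auditors a quick integrity check.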

4. Explainable AI and Transparency

Regulators are increasingly mandating explainable AI in financial services, requiring transparent and auditable decision trails for credit scoring, insurance underwriting, and trading algorithms. AI transparency and explainability are non-negotiable for public trust.

Explainability, alongside routine AI model audit and documentation, increases trust and supports customer rights for recourse. Providing clear explanations for why a loan was denied or a claim was flagged as suspicious is a key requirement of responsible AI in banking and insurance.

Furthermore, explainability aids in the crucial task of bias detection and mitigation in generative AI systems by revealing the features driving biased outcomes.
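For a simple linear scoring model, an explanation for a denial can be derived directly from per-feature contributions. The sketch below assumes a hypothetical scorecard: the weights, baseline values, and feature names are invented for illustration, and more complex models would need a dedicated explainability method rather than this approach.

```python
# Hypothetical linear scorecard. Weights and baselines are illustrative only.
WEIGHTS = {"debt_to_income": -2.0, "years_employed": 0.5, "missed_payments": -1.5}
BASELINE = {"debt_to_income": 0.30, "years_employed": 5.0, "missed_payments": 0.0}


def contributions(applicant):
    """Per-feature score contribution relative to a baseline applicant."""
    return {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}


def reason_codes(applicant, top_n=2):
    """Return the features that lowered the score the most."""
    negative = [(f, c) for f, c in contributions(applicant).items() if c < 0]
    negative.sort(key=lambda item: item[1])  # most negative first
    return [f for f, _ in negative[:top_n]]


applicant = {"debt_to_income": 0.55, "years_employed": 1.0, "missed_payments": 2.0}
print(reason_codes(applicant))  # ['missed_payments', 'years_employed']
```

The returned feature names can then be mapped to customer-facing wording, so a reviewer or customer sees which factors drove the outcome.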

5. Continuous Monitoring and Model Drift Detection

Modern AI model governance in BFSI utilizes automated detection to monitor AI model drift, performance degradation, or bias creep that may result from changes in inputs or market conditions.

Continuous monitoring and model drift detection enable swift remediation, supporting compliance with regulations such as the EU AI Act and helping maintain regulatory reporting standards.

For volatile areas like AI/ML adoption in capital markets, continuous monitoring is vital for maintaining operational resilience in AI systems. Systems for real-time alerts and automated performance checks ensure that models stay within acceptable risk and fairness thresholds, a core tenet of a practical AI governance framework in banking.
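A common way to quantify input drift is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below is a minimal standard-library version; the bin count and the 0.25 alert threshold are rule-of-thumb assumptions, not regulatory values.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]    # training-time feature values
live = [0.5 + i / 200 for i in range(100)]  # production values, shifted upward

score = psi(baseline, live)
if score > 0.25:  # assumed alert threshold
    print(f"Drift alert: PSI={score:.2f}")
```

Running a check like this on a schedule for each monitored feature, and routing alerts to the model owner, is one simple form of the automated performance checks described above.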

AI Governance Across Banking, Financial Services, and Insurance

While the core principles of AI governance in BFSI remain constant, their application varies significantly across the sector's key segments.

AI Governance Framework in Banking

Banks rely on sophisticated AI governance frameworks to underpin the adoption of AI/ML in capital markets and risk management. Frameworks standardize AI model validation, AI model audit processes, and ethical oversight, which are necessary for deploying generative AI use cases, such as document processing and predictive analytics.

The focus here is on model risk management in the BFSI sector, as banks are highly regulated institutions. Ethical AI in banking includes rigorous testing to prevent bias in lending and recruitment models, ensuring alignment with consumer protection laws. Furthermore, an AI oversight committee ensures top-down accountability for all deployed models, particularly those driving AI-driven decision-making in the BFSI sector.

AI Governance in Financial Services

AI governance in financial services focuses on broad regulatory compliance (GDPR, CCPA, DORA, and the EU AI Act), risk-based model tiering, and transparent processes for model inventory management, bias detection and mitigation in generative AI, and continuous improvement. The scope is wider, encompassing fintechs and investment firms, which often utilize cutting-edge AI Development Services.

A key differentiator is the emphasis on AI for financial regulations, ensuring that algorithmic trading and portfolio management systems adhere to market stability rules and client suitability requirements. This necessitates robust AI compliance in the BFSI sector across complex, international jurisdictions.

AI Governance in Insurance

Insurance institutions face unique challenges in AI governance within the BFSI sector due to the complexity of underwriting, fraud detection, and claims workflows. The NAIC’s proposed AI Model Law and other regulatory initiatives mandate fairness, auditability, and customer-centric transparency, creating demand for human-in-the-loop governance in financial AI models and external AI model audits.

AI governance in insurance places a strong emphasis on explainable AI, particularly in justifying premium adjustments or claim denials. As with responsible AI in banking, insurers must ensure that protected characteristics do not unfairly influence algorithmic outcomes, which demands constant vigilance and specific rules within the AI policy framework.

Cloud Adoption Trends and AI Development Services in BFSI

The rapid adoption of cloud-native AI Development Services has increased agility for banks and insurers while amplifying governance challenges related to data security, privacy, and access control. Cloud adoption trends in the BFSI sector are revolutionizing operations, but they also demand a renewed focus on AI model governance for managing third-party risk.

Key cloud adoption trends in BFSI include:

- Migration of legacy analytics to managed cloud platforms to benefit from scalable compute resources.

- Use of containerized AI model environments for rapid scaling and deployment, which require strong version control and AI lifecycle management in finance.

- Implementation of granular role-based access and security frameworks to protect data lineage and support AI governance and compliance.

- Integration of explainable AI and monitoring tools into the DevOps pipelines that support AI/ML adoption in capital markets.

These trends enhance operational resilience in AI systems and improve model performance, but require robust policy oversight and continuous regulatory compliance checks, especially for cross-border operations and third-party risks introduced by cloud vendors.

The AI governance framework in banking must explicitly address data residency and security protocols for cloud-hosted models. Leveraging modern capital markets IT services that specialize in cloud-based AI deployments can simplify the path to compliance.

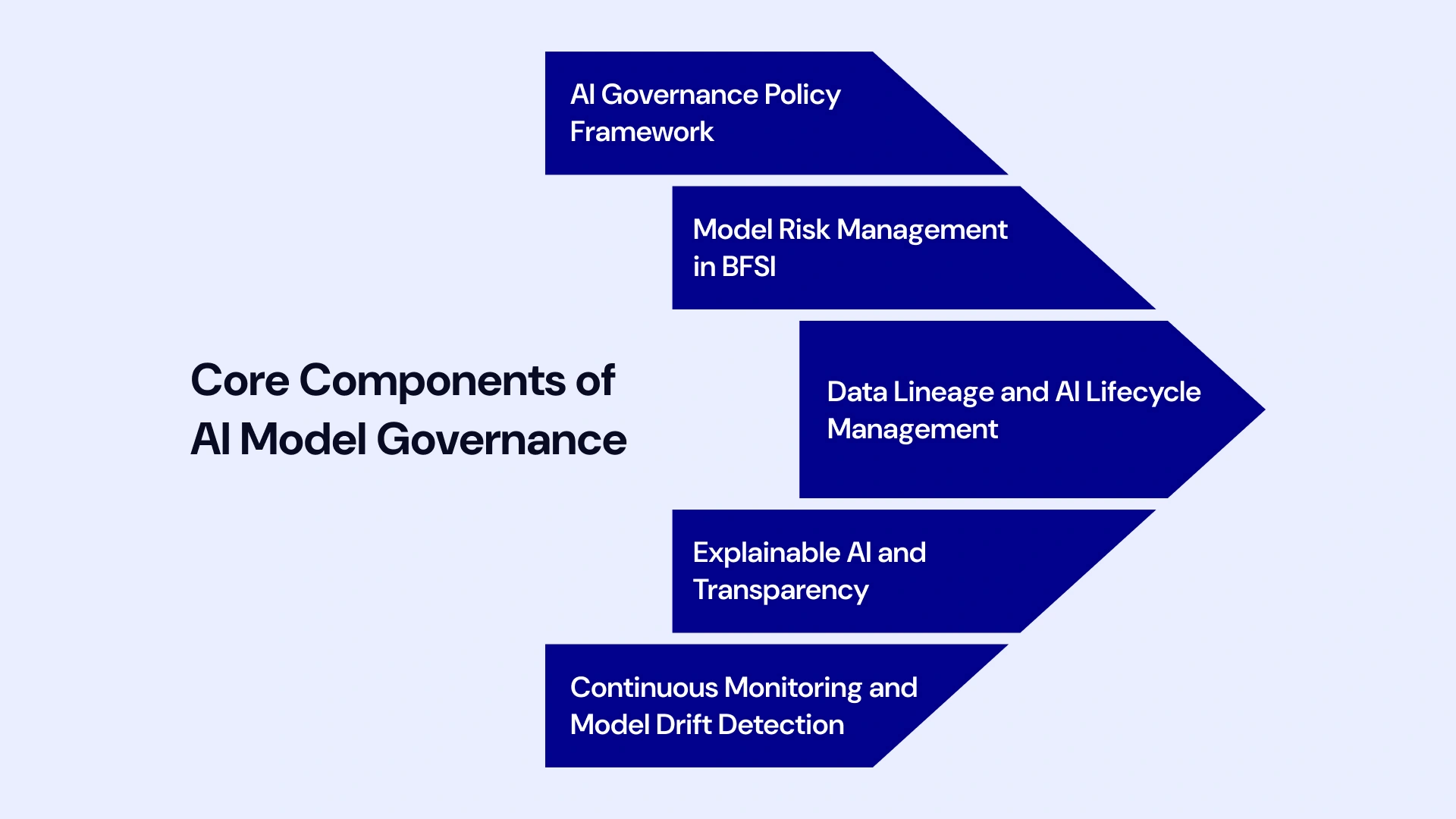

Best Practices for AI Governance in BFSI

Implementing a successful AI model governance framework in the BFSI sector requires a commitment to the proactive and continuous integration of governance into the development and operations workflow.

To move beyond mere checklist compliance and achieve genuine responsible AI in banking and insurance, top-tier financial institutions must embed these practices across the entire AI lifecycle management in finance:

1. Establish a High-Accountability AI Oversight Committee (AOC)

The AOC must be a cross-disciplinary body comprising senior leaders from Legal, Compliance, Ethics, Risk, and Technology. This committee serves as the ultimate arbiter, responsible for overseeing ethics, regulatory compliance, and business risks.

It ensures the AI policy framework is consistently adhered to, standardizes the definition of ethical AI in banking, and drives top-down accountability for all AI-driven decision-making in the BFSI sector. This centralization ensures strategic alignment with the firm's overall risk appetite.

2. Mandate Rigorous Model Validation and Stress Testing

Go beyond basic testing. Conduct regular, independent AI model validation and stress testing of AI models using challenger data sets that simulate extreme market conditions or economic shocks.

This practice is critical for robust model risk management in BFSI, ensuring that models remain strong and reliable, particularly those underpinning capital markets IT services and lending decisions. The goal is to proactively identify and mitigate tail risks before they materialize into system-wide failures, ensuring operational resilience in AI systems.

3. Implement Advanced Continuous Monitoring & Drift Detection

Automated, real-time, continuous monitoring and model drift detection in AI is non-negotiable. Deploy systems that track performance decay, data quality shifts, and critically, unintended bias creep.

Automated drift monitoring, anomaly detection, and bias mitigation in generative AI are essential, supported by visual dashboards and automated alerts. This ensures models maintain their intended performance and fairness in production and that compliance issues are caught as soon as they arise.
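Bias creep can be tracked with a simple group-level metric such as the ratio of approval rates between two groups. The sketch below uses made-up decisions, and the 0.8 cut-off is the "four-fifths" rule of thumb borrowed from US employment guidance; applying it to lending or insurance is an assumption, and a real program would use metrics agreed with legal and compliance teams.

```python
def approval_rate(decisions):
    """Share of approvals in a list of 1 (approved) / 0 (denied) decisions."""
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(protected, reference):
    """Approval-rate ratio; values well below 1.0 suggest adverse impact."""
    return approval_rate(protected) / approval_rate(reference)


# Illustrative data only: 1 = approved, 0 = denied.
protected_group = [1, 0, 0, 1, 0, 0, 1, 0, 0, 0]  # 30% approved
reference_group = [1, 1, 0, 1, 1, 0, 1, 0, 1, 0]  # 60% approved

ratio = disparate_impact_ratio(protected_group, reference_group)
flagged = ratio < 0.8  # assumed threshold for review
print(f"ratio={ratio:.2f}, flagged={flagged}")
```

Computing this ratio on each monitoring window and plotting it on a dashboard makes gradual fairness degradation visible before it becomes a compliance finding.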

4. Build Transparent Data Lineage and Comprehensive Audit Trails

Treat data as a governed asset. Document the data lineage to support AI governance by maintaining comprehensive records of data sources, transformations, model releases, performance logs, and AI model audit trails.

This level of transparency is vital for regulatory scrutiny and forms the backbone of AI transparency and explainability. Ensuring full traceability from raw data input to final algorithmic output is key to proving compliance and facilitating rapid investigation.

5. Utilize Human-in-the-Loop (HITL) for Critical Decisions

Integrate human-in-the-loop governance in financial AI models for high-impact decisions (e.g., complex credit denials, large insurance claims, high-risk fraud flags). This leverages the efficiency of AI while retaining human judgment and ultimate accountability.

Employ explainable AI in financial services tools to provide human reviewers with clear rationales for AI recommendations, thereby supporting customer inquiries and third-party audits. This blend of technology and human oversight is the ultimate safeguard for responsible AI in the banking industry.
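The routing logic behind human-in-the-loop review can be expressed as a small set of explicit rules. The sketch below is hypothetical: the `Decision` fields, the confidence floor, and the amount ceiling are illustrative assumptions that a real institution would set through its own policy.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    outcome: str       # "approve" or "deny"
    confidence: float  # model confidence in the range 0..1
    amount: float      # monetary exposure of the case


def route(decision, min_confidence=0.90, max_auto_amount=50_000):
    """Send adverse, low-confidence, or high-value decisions to a human."""
    if decision.outcome == "deny":
        return "human_review"  # adverse outcomes always get a reviewer
    if decision.confidence < min_confidence:
        return "human_review"
    if decision.amount > max_auto_amount:
        return "human_review"
    return "auto"


print(route(Decision("c-101", "approve", 0.97, 12_000)))  # auto
print(route(Decision("c-102", "deny", 0.99, 8_000)))      # human_review
```

Keeping the rules this explicit makes them easy to audit and lets the oversight committee adjust thresholds without touching the model itself.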

6. Proactively Align with Evolving Global Regulations

Adopt a "future-proof" approach to compliance. Regularly update the AI governance framework across banking and insurance departments to align not only with current laws, but also with emerging global standards, such as the EU AI Act, the NAIC Model Law, and the RBI draft guidelines.

This proactive stance ensures your AI compliance in BFSI remains robust and avoids costly retrofitting, cementing your reputation as a leader in ethical AI governance in financial services.

Pro tip: The rise of Generative AI (GenAI) necessitates new, tailored best practices for AI model governance in BFSI, particularly in terms of security and compliance with financial regulations. GenAI security governance is now a critical sub-discipline.

AI Governance Best Practices for GenAI Security and Financial Regulations:

- Enforce Proactive AI Compliance in BFSI: Institute regular audits, ethics reviews, and real-time reporting capabilities to adhere to evolving laws such as the EU AI Act.

- GenAI Security Governance: Implement specialized controls to prevent data leakage and intellectual property risks when using LLMs. This includes secure API management and data sanitization protocols.

- Real-Time Monitoring and Decision Support: Utilize predictive analytics for real-time fraud detection and compliance monitoring in capital markets IT services, enabling rapid AI-driven decision-making in BFSI.

- Bias Mitigation in Generative AI: Conduct rigorous bias detection and mitigation in generative AI applications using both algorithmic checks and human-in-the-loop review.

- Maintain Comprehensive Inventory: Keep up-to-date inventory and lineage records for all financial AI models, including those for inventory management, trading, and customer engagement, to support data lineage and AI governance.

- Operational Resilience and Stress Testing: Enforce operational resilience in AI systems through periodic stress testing of AI models, incident simulations, and disaster recovery plans for AI systems, particularly those hosted in the cloud.

- Continuous Feedback Loop: Set up continuous AI model drift monitoring and integrate feedback from business users, compliance officers, and customers to inform the AI lifecycle management in finance.
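The data sanitization control listed under GenAI security governance can be sketched as a redaction pass applied to text before it is sent to an LLM. The patterns below are deliberately simple assumptions for illustration; they are not a complete or production-grade detector for personal or financial data.

```python
import re

# Illustrative patterns only; real deployments need far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched sensitive value with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Email jane.doe@example.com, card 4111 1111 1111 1111, SSN 123-45-6789"
print(redact(prompt))
# Email [EMAIL], card [CARD], SSN [SSN]
```

Placing a step like this in front of every outbound LLM call gives a single, auditable point at which leakage controls are enforced.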

Challenges of AI Model Governance in BFSI

Despite the clear benefits, establishing and maintaining effective AI governance in BFSI presents several complex challenges that leaders must proactively address.

| Challenge | Description | Governance Solution |
| --- | --- | --- |
| Complex Regulatory Landscape | BFSI companies face overlapping local (e.g., US state-level) and global regulations for AI, such as DORA and the EU AI Act, which complicates adherence across borders. | Establish a centralized AI oversight committee and create a unified AI policy framework that maps all requirements to a single set of controls. |
| Bias Detection and Mitigation in Generative AI | Financial AI models, particularly those utilizing large language models, must proactively address potential bias in data sources and algorithms to mitigate legal, ethical, and reputational risks. | Mandate rigorous pre- and post-deployment algorithmic impact assessments and dedicated tooling for bias detection and mitigation in generative AI. |
| Operational Resilience | AI systems must withstand market shocks, evolving threats, and technology failures, especially when critical assets are managed in the cloud. | Enforce regular stress testing of AI models, incident simulations, and robust disaster recovery plans as part of AI lifecycle management in finance. |
| Data Quality and Lineage | Poor data handling can introduce errors or bias, making robust data governance practices fundamental for model reliability and regulatory adherence. | Implement tools and policies for automated data lineage to support AI governance and enforce mandatory data quality gates before model training. |
| Scalability | With the accelerating adoption of AI/ML in capital markets, insurance, and banking, AI governance structures in BFSI must scale in tandem with the increasing volume and complexity of model deployments. | Utilize automated MLOps and AI Development Services platforms that embed governance and AI model audit trails natively. |

Examples of AI Model Governance in BFSI

Successful organizations are transforming their AI governance in financial services from a compliance burden into a competitive advantage.

| BFSI Segment | Governance Initiatives | Impact/Outcome |
| --- | --- | --- |
| Banking | Model Risk Committees, Explainable AI in financial services checks, and mandatory AI model validation. | Reduced bias in lending, enhanced compliance with fair lending acts, and faster regulatory AI model audits. |
| Capital Markets IT Services | Automated AI model drift monitoring and detection systems, high-frequency stress testing in AI models. | Improved model performance in volatile markets, early detection of market shocks, and greater operational resilience in AI systems. |
| Insurance | NAIC Model Law frameworks, external AI model audits, and human-in-the-loop governance in financial AI models for complex claims. | Higher customer trust, improved transparency for underwriting decisions, and reduced litigation risk. |
| Cross-sector | AI oversight committees, GenAI security governance, and proactive bias detection and mitigation in the training of generative AI. | Fewer ethical breaches, proactive remediation of biases, and more substantial alignment with responsible AI in banking. |
| Risk Management | An AI model for inventory management is utilized in conjunction with predictive analytics for credit default and liquidity risk. | Enhanced AI-driven decision-making in BFSI and better preparedness for financial crises, central to AI for financial regulations. |

The Future of AI Model Governance in BFSI

The next era of AI in banking, financial services, and insurance is characterized by the tension between the rapid adoption of Generative AI (GenAI) and an increasingly prescriptive global regulatory landscape.

The future of AI model governance in BFSI will shift from simply validating static models to establishing adaptive, risk-proportionate, and automated compliance systems capable of governing sophisticated, autonomous AI agents.

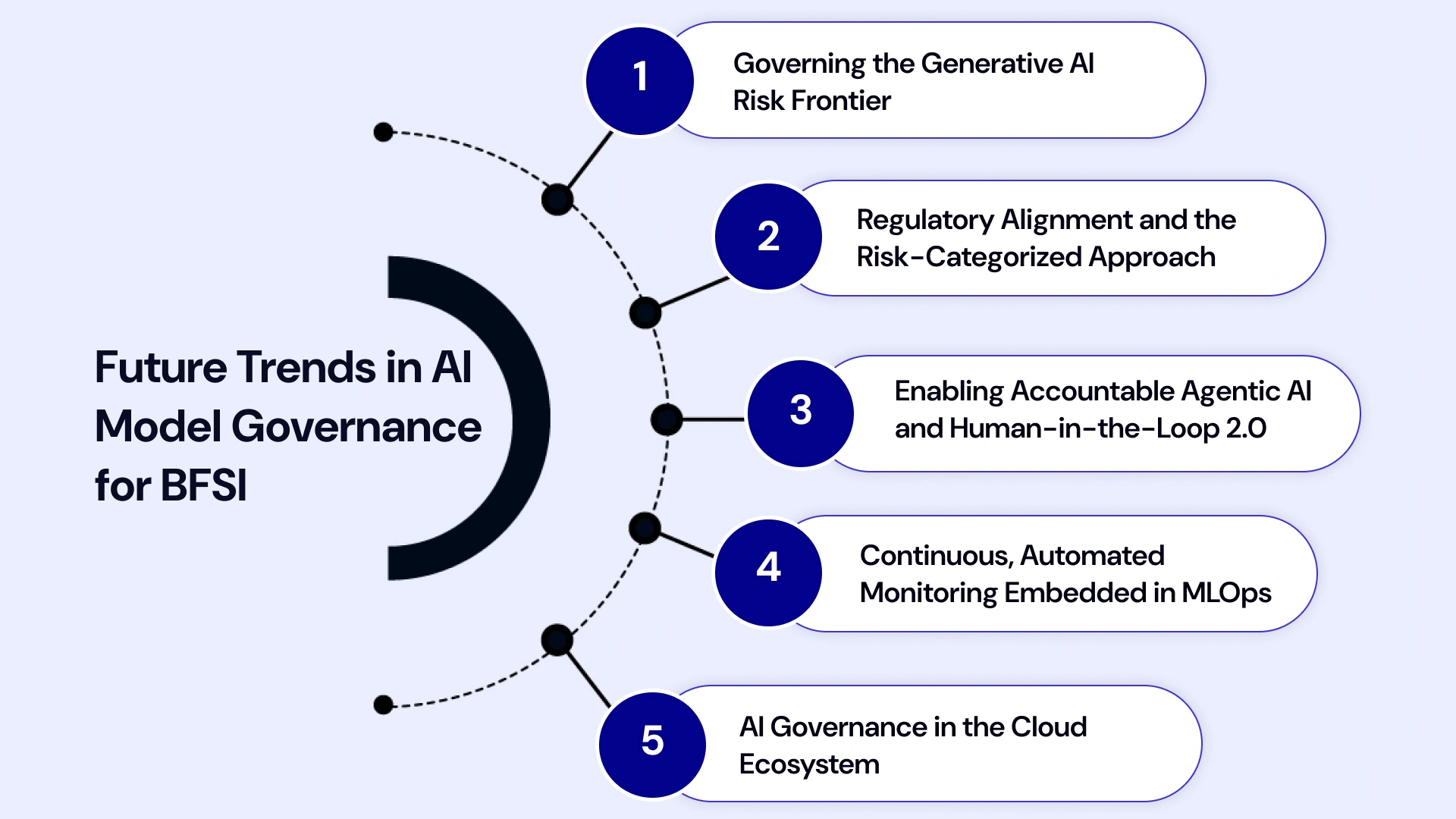

This transformation requires financial leaders in the US and Canada to look beyond the current AI governance framework in banking and prepare for five crucial trends:

Governing the Generative AI Risk Frontier

GenAI introduces novel risks like "hallucinations" and intellectual property concerns. Future best practices for GenAI security governance will mandate the use of automated AI model audit trails and Retrieval-Augmented Generation (RAG) guardrails. Proactive bias detection and mitigation in generative AI will be standard, focusing on the fairness and truthfulness of the generated content to ensure responsible AI in banking.

Regulatory Alignment and the Risk-Categorized Approach

Regulations such as the EU AI Act are pushing institutions to adopt a risk-categorized approach to AI governance in financial services. High-risk applications require the most stringent validation and stress testing of AI models. This calls for a centralized AI compliance platform that can interpret and apply diverse regulatory mandates at the same time.

Enabling Accountable Agentic AI and Human-in-the-Loop 2.0

As AI Development Services produce autonomous AI capable of AI-driven decision-making in BFSI, accountability becomes more complicated. The future requires an evolution of human-in-the-loop governance in financial AI models, where human oversight is mandated at critical intervention points. XAI tools will provide instant, auditable rationales, ensuring operational resilience in AI systems and preserving final human authority.

Continuous, Automated Monitoring Embedded in MLOps

The volatility of markets and the rapid adoption of AI/ML in capital markets necessitate continuous vigilance. AI lifecycle management in finance will rely on automated, continuous monitoring and model drift detection. This proactive drift monitoring will constantly scan for subtle shifts in fairness and compliance posture, which is critical for capital markets IT services.

AI Governance in the Cloud Ecosystem

Accelerated cloud adoption in the BFSI sector is leading to a greater reliance on third-party vendors and cloud environments. The future demands tighter governance over the entire ecosystem, requiring cloud providers to offer native governance features and cross-cloud data lineage. Managing third-party risk and ensuring data residency will become a central focus for the AI oversight committee.

Elevate AI Governance and Compliance with VLink Expertise

The journey to world-class AI model governance in BFSI is complex and requires specialized expertise that bridges regulatory compliance, advanced AI development services, and cloud security. For US and Canadian financial institutions, navigating the evolving landscape of the AI governance framework in banking and achieving robust AI compliance in BFSI while maximizing ROI is a monumental task.

VLink’s dedicated team brings domain expertise in creating, validating, and governing highly regulated AI systems. Our services focus on establishing and operationalizing the five core components of AI model governance in BFSI:

- Custom AI Policy Framework Development: Creating an AI policy framework tailored to your organizational structure and compliant with all local and international regulations (including support for EU AI Act compliance).

- Model Risk Management (MRM) as a Service: Implementing independent model risk management in BFSI, conducting mandatory stress testing of AI models, and providing AI model validation and AI model audit services.

- AI/MLOps & Lifecycle Management: Deploying automated platforms that embed data lineage to support AI governance, continuous monitoring, and model drift detection in AI, and version control for seamless AI lifecycle management in finance.

- Explainable AI (XAI) Implementation: Integrating explainable AI in financial services tools to ensure AI transparency and explainability for all AI-driven decision-making in BFSI.

- Responsible AI & Ethical AI Audits: Proactive bias detection and mitigation in generative AI and system-wide audits to ensure adherence to responsible AI in banking and ethical AI in banking principles.

Leverage VLink’s experience in cloud adoption trends in BFSI and capital markets IT services to secure your AI future. We empower you to deploy AI at scale, confidently, and compliantly.

Conclusion

The future of banking, financial services, and insurance is inextricably linked to the successful and responsible adoption of AI. AI Model Governance in BFSI is the crucial discipline that turns the promise of AI into reality by mitigating the associated risks.

By prioritizing a comprehensive AI governance framework in banking, supported by an active AI oversight committee, robust model risk management in the BFSI sector, and state-of-the-art continuous monitoring and model drift detection in AI, financial institutions in the US and Canada can achieve unprecedented levels of operational resilience in their AI systems.

The shift to responsible AI in banking is not merely a compliance task; it is a strategic imperative for maintaining customer trust, ensuring fairness through explainable AI in financial services, and maximizing the business value derived from all AI Development Services. Embrace comprehensive AI governance in BFSI today to ensure your organization is prepared for the next wave of financial innovation and regulatory scrutiny.

Unlock secure, compliant, and high-ROI AI for your banking, insurance, or financial services operation. Elevate AI model governance and operational resilience in AI systems in the age of cloud and generative AI. Connect with our team of AI governance specialists to book a free consultation or strategy session tailored for US and Canadian BFSI leaders.

Shivisha Patel
