The Gulf Cooperation Council region is no longer just consuming technology—it is building the infrastructure that will power the next generation of artificial intelligence. For CIOs, CTOs, and infrastructure leaders across Saudi Arabia and the UAE, the conversation has shifted dramatically. This is no longer about cloud migration. This is about sovereign AI scaling.

The numbers tell a compelling story. The AI data center market in the Middle East is projected to reach $6.6 billion by 2030, growing at a CAGR of 27.2%. Saudi Arabia commands approximately 31.6% of regional revenue share, while UAE live capacity has hit 376MW with vacancy rates plummeting to a record low of 2.4%. Demand is outpacing supply at an unprecedented rate.

Yet scaling AI infrastructure in this region presents a unique trilemma that traditional data center playbooks cannot address. Extreme power density requirements from next-generation GPUs like NVIDIA H100s and Blackwell chips demand rack densities of 50–100kW+—far beyond what conventional air-cooled facilities can support. Ambient temperatures regularly exceeding 50°C make standard evaporative cooling both water-intensive and unsustainable. And strict data sovereignty laws under KSA PDPL and UAE PDPL mandate that national data assets remain physically within borders, often requiring entirely separate architectural zones.

This article examines how the region's mega-projects are solving these challenges—and what lessons enterprise leaders can apply to their own AI infrastructure roadmaps.

Why Data Center Scalability Has Become a National Priority in KSA & UAE

Data center scalability in the Middle East has evolved from an IT concern to a matter of national economic strategy. Both Saudi Arabia and the UAE have recognized that sovereign control over AI compute capacity is fundamental to their post-oil economic visions.

Saudi Arabia's roadmap is particularly ambitious. Riyadh aims to surpass 1,300MW of data center capacity by 2030. The Public Investment Fund has committed to partnerships that dwarf previous regional investments—including a $10 billion joint venture with Google to establish a global AI hub within the Kingdom. These are not incremental expansions. They represent a fundamental repositioning of the nation as a compute superpower.

Underlying this expansion is the rise of the AI Factory paradigm. Traditional general-purpose data centers are giving way to purpose-built facilities optimized for AI training and inference workloads. These facilities must support power densities that legacy infrastructure simply cannot accommodate—often requiring complete rethinking of cooling, power distribution, and architectural design.

Consider the challenge facing a UAE energy major that recently attempted to accelerate its AI training infrastructure. Despite having access to substantial capital and talent, the project hit a 3MW cooling bottleneck that traditional solutions could not resolve. The facility's air-cooling systems, designed for conventional server workloads, were fundamentally inadequate for GPU-dense AI racks that generate three to four times the heat those systems were sized for.

This is the new reality for infrastructure leaders across the region. The question is no longer whether to scale AI infrastructure—it is how to do so without being constrained by heat, power, or regulatory architecture.

The Scalability Trilemma — Heat, Power & Law

For infrastructure leaders planning AI-ready data centers in Saudi Arabia and the UAE, three interconnected constraints define every strategic decision. Mastering this trilemma—heat, power, and law—separates successful deployments from costly failures.

Heat — Why Liquid Cooling Is Now Non-Negotiable

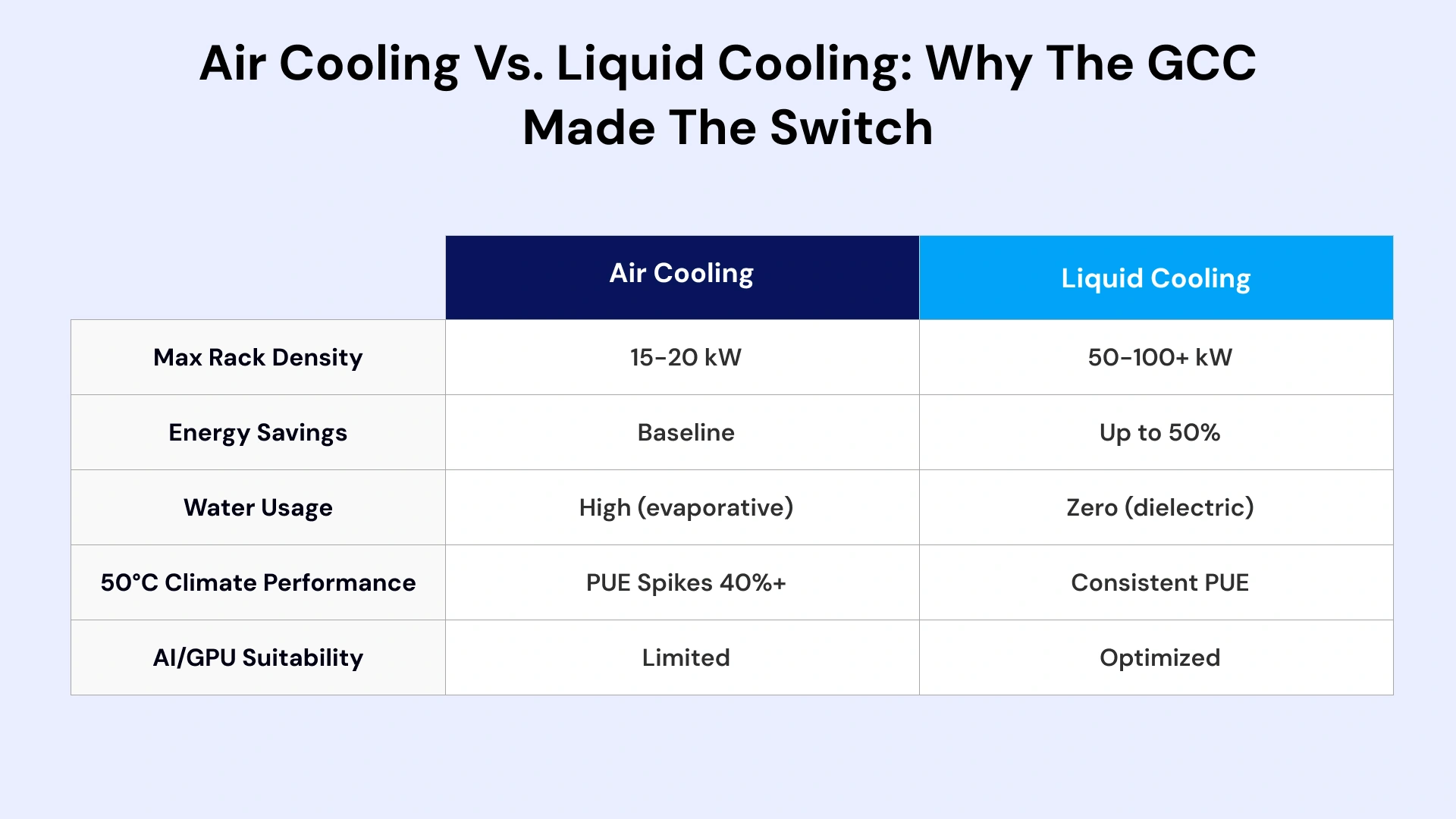

Air cooling is dead for AI workloads in the Gulf. This is not hyperbole—it is physics. When ambient temperatures regularly exceed 50°C and rack densities push past 50kW, traditional chiller-based systems fail on multiple dimensions. They consume excessive power, driving Power Usage Effectiveness ratios to unsustainable levels. They require water in a region where water scarcity is a critical constraint. And they simply cannot remove heat fast enough to prevent thermal throttling in dense GPU clusters.
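A back-of-the-envelope calculation shows why. The sensible-heat relation Q = ρ · V̇ · c_p · ΔT gives the volumetric coolant flow V̇ needed to carry a rack's heat load Q away at a given temperature rise ΔT. The Python sketch below compares air and water for a 50kW rack; the fluid properties are approximate room-temperature values, and the temperature rises (15K for air, 10K for water) are illustrative assumptions rather than design figures from any deployment.

```python
"""Coolant flow needed to remove a rack's heat load (sensible heat only)."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density_kg_m3: float        # rho, approximate near 25 degC (assumption)
    specific_heat_j_kgk: float  # c_p, approximate near 25 degC (assumption)


AIR = Coolant("air", 1.2, 1005.0)
WATER = Coolant("water", 997.0, 4180.0)
M3_S_TO_CFM = 2118.88  # 1 m^3/s expressed in cubic feet per minute


def required_flow_m3_s(heat_w: float, coolant: Coolant, delta_t_k: float) -> float:
    """Volumetric flow V such that Q = rho * V * c_p * dT."""
    if heat_w < 0 or delta_t_k <= 0:
        raise ValueError("heat must be >= 0 and delta_t must be > 0")
    return heat_w / (coolant.density_kg_m3 * coolant.specific_heat_j_kgk * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 50_000  # a 50kW GPU rack, the density threshold cited above
    air = required_flow_m3_s(rack_heat_w, AIR, delta_t_k=15)
    water = required_flow_m3_s(rack_heat_w, WATER, delta_t_k=10)
    print(f"air:   {air:.2f} m^3/s (~{air * M3_S_TO_CFM:,.0f} CFM)")
    print(f"water: {water * 1000:.2f} L/s")
    print(f"air needs ~{air / water:,.0f}x the volumetric flow of water")
```

Under these assumptions, air needs more than two thousand times the volumetric flow of water to move the same heat, which is why fan power and airflow, not only chiller capacity, become limiting as rack densities climb.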

The regional response has been decisive. New builds across KSA and UAE are defaulting to liquid cooling readiness from the design phase. Direct-to-chip cooling systems and full immersion solutions are moving from experimental deployments to standard specifications.

The performance gains are substantial. Liquid cooling reduces cooling energy consumption by up to 50% compared to equivalent air-cooled deployments while enabling rack densities that air cooling cannot support. For infrastructure leaders, this translates directly to more AI compute per square meter, a critical metric when land and construction costs in premium locations continue to escalate.

Waterless cooling innovations are accelerating adoption further. Dielectric fluid systems avoid the water consumption of water-based solutions, along with the associated regulatory and sustainability concerns, making them particularly attractive for deployments in water-restricted zones.

A major Saudi telco provides an instructive example. Its existing data center infrastructure experienced severe PUE spikes during summer months, with PUE deteriorating by 40% whenever ambient temperatures exceeded 45°C. After its high-density zones were retrofitted with closed-loop liquid cooling, the facility maintained consistent performance regardless of external conditions while reducing overall cooling energy consumption by 35%.
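PUE is the ratio of total facility power to IT equipment power, so cooling overhead shows up directly in it. The minimal sketch below uses a hypothetical load split (1,000kW IT, 500kW cooling, 100kW for power distribution and lighting) to show how a 35% cut in cooling energy, the figure reported in the telco example, moves PUE; the load split is an assumption for illustration only.

```python
def pue(it_kw: float, cooling_kw: float, other_kw: float = 0.0) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    if cooling_kw < 0 or other_kw < 0:
        raise ValueError("overheads cannot be negative")
    return (it_kw + cooling_kw + other_kw) / it_kw


def pue_after_cooling_cut(it_kw: float, cooling_kw: float, other_kw: float,
                          cut_fraction: float) -> float:
    """PUE after reducing cooling power by `cut_fraction` (0.35 means 35%)."""
    if not 0.0 <= cut_fraction <= 1.0:
        raise ValueError("cut_fraction must be between 0 and 1")
    return pue(it_kw, cooling_kw * (1.0 - cut_fraction), other_kw)


if __name__ == "__main__":
    # Hypothetical load split: 1,000kW IT, 500kW cooling, 100kW other overhead.
    before = pue(1_000, 500, 100)
    after = pue_after_cooling_cut(1_000, 500, 100, cut_fraction=0.35)
    print(f"PUE before: {before:.3f}, after: {after:.3f}")
```

With these inputs PUE falls from 1.6 to about 1.43; the IT load is unchanged, so every point of improvement is power no longer spent on overhead.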

Power — The New AI Infrastructure Bottleneck

Power availability has emerged as the primary constraint on AI infrastructure expansion across the GCC. Transmission infrastructure takes four or more years to build. In this context, securing power delivery by 2027 is now considered fast.

The scale of power requirements is staggering. A single high-density AI training cluster can consume more electricity than a small city. When multiplied across the capacity buildouts planned for Riyadh and Dubai, the demand curve far outpaces grid expansion timelines.

Nuclear power is emerging as a serious consideration for baseload requirements. The UAE's Barakah Nuclear Plant has demonstrated the viability of nuclear generation in the region, and both the UAE and Saudi Arabia are exploring Small Modular Reactors as dedicated power sources for gigawatt-scale data campuses.

For enterprise infrastructure leaders, the strategic imperative is clear: early action on power procurement is essential. Signing Power Purchase Agreements now, even for capacity needed in 2027 or 2028, positions organizations ahead of competitors who will face constrained options as demand accelerates. Capacity forecasting must extend further into the future than traditional planning cycles—five-to-seven-year horizons are becoming standard for major deployments.
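Extending the forecast horizon is essentially compound-growth arithmetic: project demand year by year and find when it overtakes contracted supply. The sketch below assumes a hypothetical 20MW starting load growing at the 27.2% CAGR cited earlier for the regional market (applying a market-wide growth rate to a single organization is a simplifying assumption) against a hypothetical 60MW of contracted capacity.

```python
from typing import Optional


def project_demand_mw(start_mw: float, cagr: float, years: int) -> list[float]:
    """Projected demand for years 0..years, compounding at `cagr` per year."""
    return [start_mw * (1.0 + cagr) ** year for year in range(years + 1)]


def first_shortfall_year(demand_mw: list[float], contracted_mw: float) -> Optional[int]:
    """First year in which demand exceeds contracted supply, or None if never."""
    for year, mw in enumerate(demand_mw):
        if mw > contracted_mw:
            return year
    return None


if __name__ == "__main__":
    # Hypothetical inputs: 20MW today, 27.2% annual growth, 60MW under contract.
    demand = project_demand_mw(start_mw=20.0, cagr=0.272, years=7)
    for year, mw in enumerate(demand):
        print(f"year {year}: {mw:6.1f} MW")
    print("first shortfall year:", first_shortfall_year(demand, contracted_mw=60.0))
```

With these inputs demand first exceeds the contracted 60MW in year 5. A three-year planning cycle would not see the gap at all, while a transmission upgrade started today (four or more years, per the text) would only just arrive in time.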

Law — Sovereignty Requirements Become Architecture Constraints

Data sovereignty in the GCC is not a compliance checkbox—it is an architectural constraint that fundamentally shapes infrastructure design. The KSA Personal Data Protection Law and UAE Federal PDPL impose requirements that cannot be satisfied by simply extending a public cloud region. Physical data residency for sensitive national assets demands distinct infrastructure zones.

The practical impact on architecture is significant. Organizations must design for multiple data classification tiers, each with different hosting requirements. Public zones can leverage hyperscaler regions from Oracle, Google, or Microsoft for non-sensitive applications. Sovereign zones must host AI training data and citizen information on-premises or through certified sovereign cloud providers like G42 Cloud or CNTXT. Air-gapped environments are required for the most sensitive government and defense workloads.
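The tiering logic lends itself to a simple rule: a workload inherits the strictest hosting zone required by any dataset it touches. The sketch below encodes that rule with hypothetical tier and zone names mirroring the three zones described above; deciding which real data categories belong in which tier under KSA PDPL or UAE PDPL is a legal determination this code does not make.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Hypothetical tiers, ordered so a higher value means more sensitive."""
    OPEN = 0
    CONFIDENTIAL = 1
    TOP_SECRET = 2


# Illustrative mapping from tier to hosting zone (names are assumptions).
ZONE_FOR = {
    Classification.OPEN: "public-hyperscaler-region",
    Classification.CONFIDENTIAL: "sovereign-cloud-or-on-prem",
    Classification.TOP_SECRET: "air-gapped",
}


def hosting_zone(dataset_classes: list[Classification]) -> str:
    """A workload must run in the zone required by its most sensitive input."""
    if not dataset_classes:
        raise ValueError("workload must declare at least one dataset")
    return ZONE_FOR[max(dataset_classes)]


if __name__ == "__main__":
    # An AI training job mixing public web data with citizen records.
    print(hosting_zone([Classification.OPEN, Classification.CONFIDENTIAL]))
```

Ordering the tiers with `IntEnum` lets `max()` pick the most sensitive input directly, so adding a tier only requires a new enum value and a zone entry.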

Free-zone regulations add additional complexity. DIFC and ADGM operate under distinct frameworks that must be navigated carefully when designing multi-zone architectures. The emerging "Data Embassies" concept in KSA represents a potential evolution—creating virtual zones where foreign data laws apply within specific physical boundaries while maintaining strict localization for national data.

Infrastructure leaders leveraging VLink's Data Center Consulting Services typically begin with comprehensive data classification exercises. Understanding exactly which data assets fall under each regulatory tier prevents costly architectural rework later in the deployment cycle.

Lessons From Saudi & UAE Mega-Projects

The region's flagship infrastructure projects offer concrete patterns that enterprise leaders can adapt for their own scaling challenges. These are not theoretical frameworks—they are battle-tested approaches validated at unprecedented scale.

G42 (UAE) — Building a Sovereign Hyperscaler Through Partnership

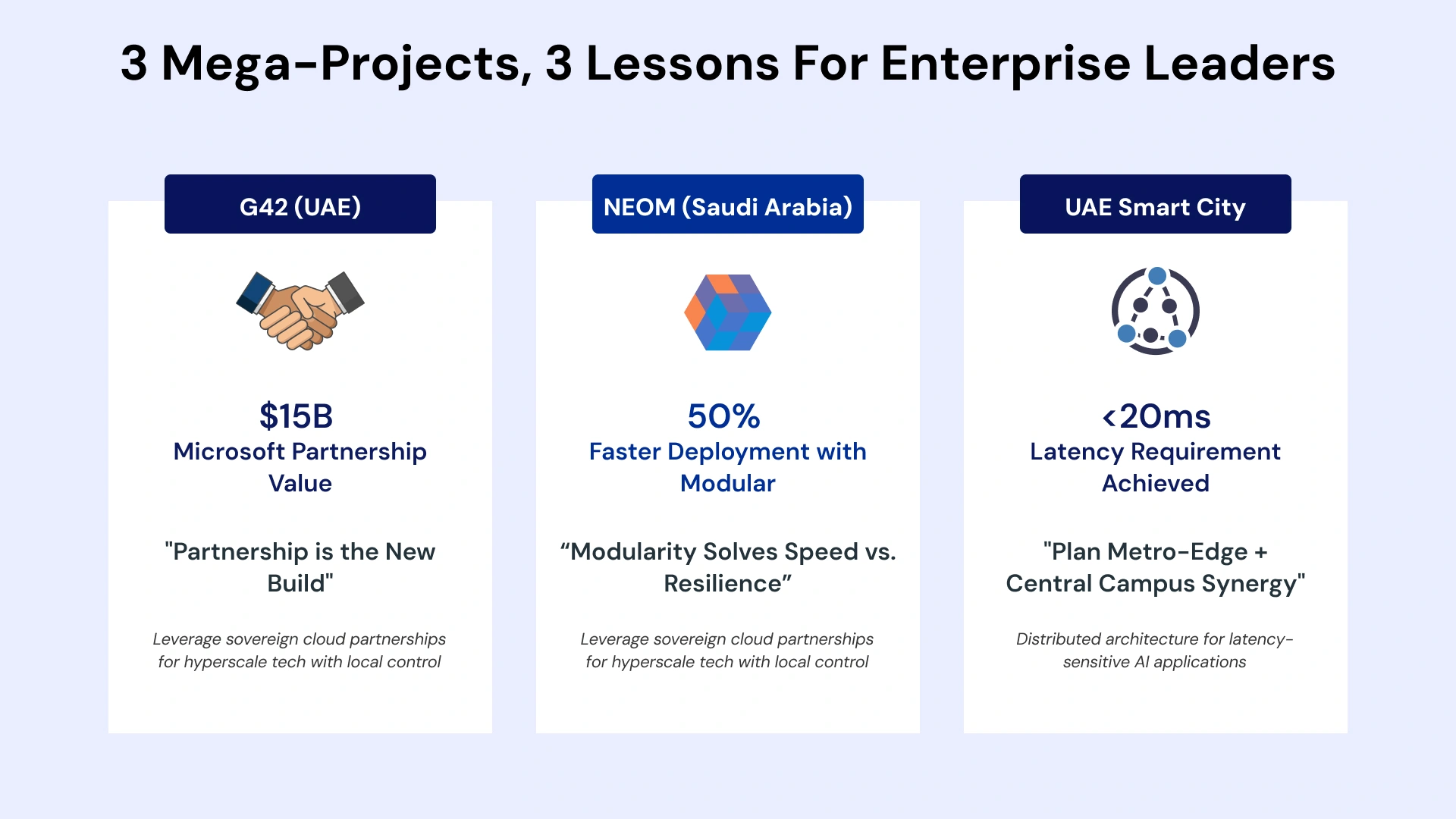

G42's approach to building sovereign AI infrastructure offers the clearest example of a strategy that is reshaping regional thinking: partnership is the new build.

Rather than attempting to construct a proprietary technology stack from the ground up, G42 partnered strategically with Microsoft Azure and Cerebras. This hybrid model allows them to leverage Microsoft's trusted platform and established tooling while deploying within sovereign UAE data centers that satisfy local residency requirements.

The Stargate UAE AI campus, announced in 2025 with partners including OpenAI, Oracle, and NVIDIA, demonstrates the scale of ambition this model enables. By combining hyperscaler expertise with sovereign deployment, G42 can pursue AI capabilities that neither approach could deliver alone.

Lesson for Enterprise Leaders: Do not build alone. Sovereign cloud partnerships through models like Oracle Alloy or Google Distributed Cloud provide hyperscale technology with local control. The build-versus-buy calculation has shifted decisively toward partnership models for organizations that lack the capital and expertise to construct purpose-built AI facilities independently.

NEOM (Saudi Arabia) — Modular, Zero-Liquid-Discharge, AI-First Infrastructure

NEOM represents the most ambitious greenfield AI infrastructure project in the world—a cognitive city requiring massive edge compute and near-zero latency across an entirely new urban environment.

The project's infrastructure approach prioritizes modularity above traditional construction methods. Pre-fabricated data center units allow capacity deployment 50% faster than conventional concrete builds. This speed advantage compounds when scaled across multiple deployment sites.

Sustainability constraints have driven innovation in cooling architecture. NEOM's Zero-Liquid-Discharge policy prohibits water-intensive cooling solutions, accelerating adoption of advanced dielectric cooling systems that exceed current industry standards.

The renewable-only power strategy, combining solar, wind, and hydrogen, provides a template for AI infrastructure that operates without fossil fuels. The initial 12MW pilot is designed to scale to hundreds of megawatts powered exclusively by clean energy.

Lesson for Enterprise Leaders: Modularity solves the speed versus resilience trade-off. For remote locations or rapid-scaling requirements, modular data centers cut deployment timelines dramatically while maintaining operational standards. Organizations planning for growth should evaluate prefabricated solutions before committing to traditional construction timelines.

UAE Smart-City & Telco Deployments (Composite Example)

A major UAE smart-city initiative provides instructive lessons in distributed architecture design. The project's requirements—sub-20ms latency for autonomous vehicle coordination and real-time sensor processing—could not be satisfied by centralized cloud infrastructure alone.

The solution combined metro-edge deployments with central campus resources. Small-footprint edge facilities distributed throughout the urban environment handle latency-sensitive workloads, while larger centralized facilities manage training, batch processing, and data aggregation.
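Whether a workload can live at the central campus is largely a latency-budget calculation. Light in optical fiber covers roughly 200km per millisecond (about two-thirds of its speed in vacuum), so distance sets a floor on round-trip time, and every hop, queue, and radio link adds to it. The sketch below picks the most centralized site that still meets a budget; the site distances, per-site overheads, and route factor are hypothetical values chosen for illustration.

```python
from typing import NamedTuple, Optional

# Light in optical fiber covers roughly 200km per millisecond (about 2/3 of c).
FIBER_KM_PER_MS = 200.0


class Site(NamedTuple):
    name: str
    distance_km: float   # straight-line distance from users or sensors
    overhead_ms: float   # hypothetical: radio access, network hops, queuing, compute


def round_trip_ms(site: Site, route_factor: float = 1.5) -> float:
    """Fiber propagation round trip plus the site's fixed overhead.

    route_factor (fiber path length / straight-line distance) is an assumption.
    """
    one_way_ms = site.distance_km * route_factor / FIBER_KM_PER_MS
    return 2 * one_way_ms + site.overhead_ms


def place(budget_ms: float, sites_central_first: list[Site]) -> Optional[str]:
    """Pick the most centralized site that still meets the latency budget."""
    for site in sites_central_first:
        if round_trip_ms(site) <= budget_ms:
            return site.name
    return None  # no listed site can meet the budget


if __name__ == "__main__":
    sites = [
        Site("central-campus", distance_km=400, overhead_ms=15.0),  # hypothetical
        Site("metro-edge", distance_km=10, overhead_ms=6.0),        # hypothetical
    ]
    for workload, budget in [("vehicle coordination", 20), ("model training", 500)]:
        print(workload, "->", place(budget, sites))
```

In this model the sub-20ms coordination workload lands on the metro edge while training stays central. Note that at metro distances the fixed overhead, not propagation, dominates; the edge wins mainly because it is assumed to avoid backhaul hops, which is encoded in the overhead values rather than derived.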

This hybrid topology has become the emerging standard for smart-city and IoT-intensive deployments across the region. The UAE's 95% 5G coverage creates the connectivity fabric necessary for seamless workload distribution between edge and core facilities.

Lesson for Enterprise Leaders: Plan for metro-edge plus central campus synergy. Single-site architectures cannot satisfy the latency requirements of next-generation applications. Infrastructure planning must account for distributed deployment from the design phase.

The Sovereign-Scale Maturity Model (Framework)

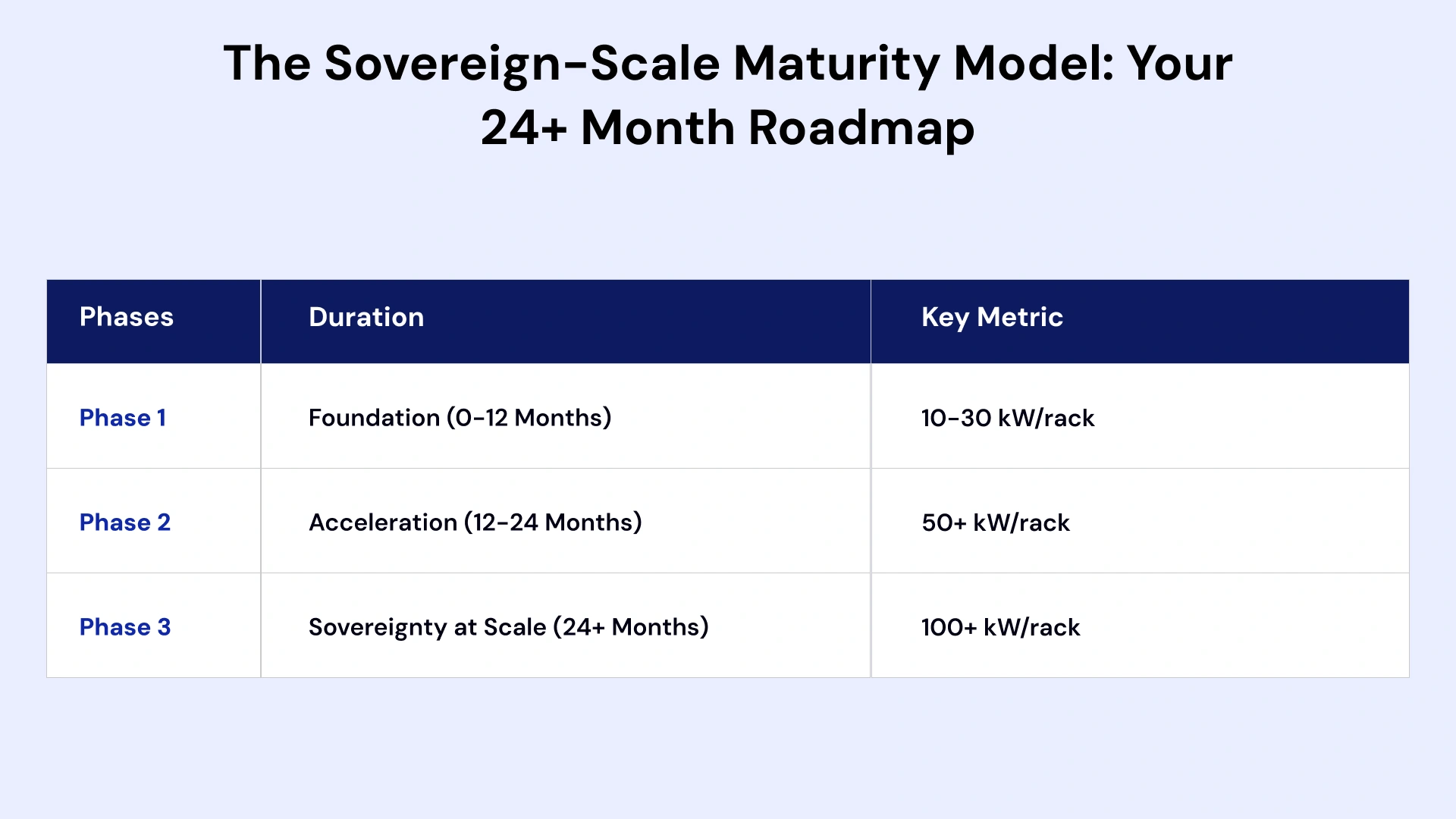

Infrastructure transformation does not happen overnight. The following phased approach provides a roadmap for CXOs planning AI-ready data center expansion in the GCC—balancing immediate operational needs with long-term strategic positioning.

Phase 1 — Foundation (0–12 Months)

The foundation phase establishes the groundwork for scalable AI infrastructure without requiring massive capital commitments. The focus is on quick wins that create optionality for future expansion.

Cooling Strategy: Implement Rear Door Heat Exchangers for existing mixed workloads. These retrofit solutions support rack densities of 10–30kW without requiring facility-wide redesign—bridging current operations while planning for higher-density future deployments.

Data Architecture: Complete data classification across open, confidential, and top-secret tiers. This exercise determines which workloads can leverage public cloud, which require sovereign hosting, and which demand air-gapped environments.

Sourcing: Lock in GPU supply through cloud provider OpEx models. Given 18+ month lead times for hardware procurement, cloud-based access to AI accelerators provides immediate compute capacity while long-term sourcing strategies mature.

Phase 2 — Acceleration (12–24 Months)

The acceleration phase transitions from tactical adaptations to strategic infrastructure investments. This is where organizations build differentiated AI compute capabilities.

Cooling Strategy: Deploy Direct-to-Chip cooling loops for high-density GPU racks supporting 50kW+ workloads. This targeted approach applies liquid cooling where it delivers maximum benefit without requiring facility-wide conversion.

Power Strategy: Execute Power Purchase Agreements for renewable energy supply. Solar integration is particularly attractive given regional irradiance levels, with PPAs providing price certainty while advancing sustainability objectives.

Data Architecture: Implement hybrid cloud architecture combining public cloud for open workloads with colocation or sovereign cloud for confidential data. This multi-zone approach satisfies regulatory requirements while maintaining operational flexibility.

Phase 3 — Sovereignty at Scale (24+ Months)

The sovereignty-at-scale phase positions organizations for leadership in regional AI capability. Investments at this stage create durable competitive advantages.

Cooling Strategy: Pilot full immersion cooling for next-generation AI training clusters supporting 100kW+ rack densities. These deployments prepare for GPU architectures that exceed current thermal management capabilities.

Power Strategy: Invest in on-site generation through hydrogen fuel cells or Small Modular Reactors. Grid-interactive UPS systems can also provide demand response capabilities that generate revenue while ensuring resilience.

Sourcing: Establish strategic OEM partnerships for priority hardware supply. Direct relationships with NVIDIA, AMD, and leading server manufacturers can secure priority allocation of next-generation silicon, sometimes ahead of general availability.

How do data centers prepare for 100kW+ rack densities?

Organizations prepare through phased adoption of liquid cooling technologies, beginning with rear-door heat exchangers and progressing to direct-to-chip and full immersion systems as density requirements increase.
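The phased progression in that answer can be expressed as a density-to-technology lookup. The sketch below uses the thresholds from the maturity model above (rear-door heat exchangers for 10-30kW, direct-to-chip for 50kW+, immersion for 100kW+); placing the unspecified 30-50kW band on direct-to-chip and leaving sub-10kW racks on air are assumptions, and the rack fleet is hypothetical.

```python
from collections import Counter


def cooling_for_density(rack_kw: float) -> str:
    """Map a rack's power density (kW) to a cooling technology tier."""
    if rack_kw <= 0:
        raise ValueError("rack density must be positive")
    if rack_kw < 10:
        return "air"  # assumption: below the RDHx range, keep existing air cooling
    if rack_kw <= 30:
        return "rear-door heat exchanger"  # Phase 1 range: 10-30kW
    if rack_kw < 100:
        return "direct-to-chip"  # Phase 2 targets 50kW+; 30-50kW placed here by assumption
    return "immersion"  # Phase 3 pilots: 100kW+


def cooling_plan(rack_densities_kw: list[float]) -> Counter:
    """Count how many racks fall into each cooling tier."""
    return Counter(cooling_for_density(kw) for kw in rack_densities_kw)


if __name__ == "__main__":
    # Hypothetical mixed estate: legacy, inference and training racks.
    fleet = [8, 8, 15, 25, 40, 60, 80, 120]
    for tech, count in cooling_plan(fleet).most_common():
        print(f"{tech}: {count} rack(s)")
```

Running the plan against the current estate, then against the estate expected in two or three years, shows which phase's cooling investment the shift in rack mix will actually require.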

What is the best approach for hybrid AI infrastructure in the GCC?

The recommended approach combines public cloud for non-sensitive workloads with sovereign cloud zones for regulated data, enabling regulatory compliance while maintaining operational agility and cost efficiency.

Key Challenges for Gulf Enterprises — And Proven Solutions

Enterprise infrastructure leaders across the GCC face common obstacles when scaling AI-ready data center capacity. The following solutions draw from regional mega-project implementations and represent validated approaches to persistent challenges.

| Challenge | The Problem | Proven Solution |
|---|---|---|
| Speed vs Resilience | Tier IV facilities require 24-36 months; business needs AI capacity immediately | Modular Data Centers: deploy prefab pods in 6-9 months using a Shell & Core strategy |
| Vendor Lock-in | Reliance on a single US hyperscaler risks sovereignty and creates pricing exposure | Multi-cloud with Sovereign Exit: Kubernetes and open standards preserve workload portability |
| Skill Shortage | Shortage of advanced semiconductor and AI infrastructure talent in the region | Managed Services + AI Academies: partner with MSPs; invest in local upskilling programs |
| Extreme Heat | PUE spikes in summer; water cooling restricted or banned in many zones | Closed-Loop Liquid Cooling: dielectric fluids; specify air-cooled equipment rated for ASHRAE A3/A4 inlet temperatures |

What's Next? 2025–2030 Trends GCC CXOs Must Plan For

Infrastructure decisions made today must account for technological and regulatory shifts that will reshape the regional landscape over the next five years. Three trends warrant particular attention from strategic planners.

SMR-Powered Data Campuses

Small Modular Reactors are moving from concept to serious consideration for gigawatt-scale data center power. Both Saudi Arabia and the UAE are exploring nuclear-backed baseload generation specifically designed for AI infrastructure campuses.

SMRs offer compelling advantages for data center applications: consistent baseload power independent of weather or grid constraints, compact footprints suitable for on-site deployment, and carbon-free generation aligned with sustainability commitments. Expect formal announcements of SMR-data center integration projects within the next 24-36 months.

Data Embassies & Virtual Zones

Saudi Arabia is pioneering regulatory frameworks that create "virtual zones" where foreign data laws apply within specific physical boundaries. These Data Embassies would allow global technology companies to host sensitive data within the Kingdom without facing local government access requirements for certain data categories.

This innovation represents a potential breakthrough for foreign direct investment in regional AI infrastructure. International enterprises currently hesitant to localize operations due to data access concerns may find these frameworks provide sufficient legal comfort to proceed with regional deployments.

Metro Edge + 5G AI Compute Explosion

The UAE's 95% 5G coverage creates infrastructure conditions for explosive growth in edge AI applications. Sub-20ms latency requirements for autonomous transport, industrial automation, and smart city sensors will drive compute from centralized cloud regions to distributed metro-edge deployments.

Infrastructure leaders should anticipate that edge compute capacity requirements will grow faster than central facility demand over the next five years. Investment in edge-capable architectures—or partnerships with edge infrastructure providers—positions organizations for this shift.

Myths CXOs Still Believe — And the Facts

Persistent misconceptions continue to shape infrastructure decisions in ways that increase cost and risk. Addressing these directly enables more effective strategic planning.

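Myth: "We should build our own data center to save money."

Fact: For organizations that lack the capital and expertise to construct purpose-built AI facilities, the build-versus-buy calculation has shifted toward partnership models. With Tier IV builds taking 24-36 months and GPU lead times of 18+ months, sovereign cloud partnerships, colocation, and cloud OpEx access to accelerators typically deliver capacity sooner and with less capital at risk.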

Myth: "Data sovereignty means all data must stay in the country."

Fact: Only classified and sensitive data categories require physical localization. Most commercial data can flow across borders under appropriate contractual frameworks. Comprehensive data classification is the essential first step before committing to infrastructure investments—many organizations over-invest in sovereign capacity based on incomplete understanding of actual regulatory requirements.

Decision Guide — Which Data Center Strategy Fits Your Roadmap?

Infrastructure strategy must align with organizational priorities. The following decision framework helps leaders identify the approach best suited to their specific constraints and objectives.

If Speed Is Priority → Choose Modular / Prefab

Organizations facing immediate capacity constraints should prioritize modular data center solutions. Prefabricated units deploy in 6-9 months versus 24-36 months for traditional construction. Shell and Core strategies complement this: the building envelope and core utilities are completed first, and data halls are fitted out incrementally as demand materializes, so operations can begin before the full facility is finished.
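The speed advantage compounds because modular capacity comes online in increments rather than all at once. One way to quantify this is MW-months: the sum of capacity actually online in each month of a planning horizon. The sketch below compares a hypothetical 20MW target delivered as four 5MW pods (first at month 9, then one every 3 months) against a single 20MW build completed at month 30; all figures are illustrative assumptions drawn from the ranges quoted above.

```python
from functools import partial
from typing import Callable


def modular_mw(month: int, first_pod_month: int, pod_mw: float,
               pod_interval_months: int, max_pods: int) -> float:
    """Capacity online at `month` when prefab pods arrive at a steady cadence."""
    if month < first_pod_month:
        return 0.0
    pods = 1 + (month - first_pod_month) // pod_interval_months
    return pod_mw * min(pods, max_pods)


def monolithic_mw(month: int, ready_month: int, total_mw: float) -> float:
    """Capacity online at `month` for a single build delivered all at once."""
    return total_mw if month >= ready_month else 0.0


def mw_months(capacity_at: Callable[[int], float], horizon_months: int) -> float:
    """Sum of capacity online in each month of [0, horizon_months)."""
    return sum(capacity_at(m) for m in range(horizon_months))


if __name__ == "__main__":
    # Hypothetical scenario: same 20MW end state, different delivery profiles.
    modular = partial(modular_mw, first_pod_month=9, pod_mw=5.0,
                      pod_interval_months=3, max_pods=4)
    monolithic = partial(monolithic_mw, ready_month=30, total_mw=20.0)
    print("modular MW-months:", mw_months(modular, 36))
    print("monolithic MW-months:", mw_months(monolithic, 36))
```

Over a 36-month horizon this hypothetical modular path yields 450 MW-months of usable capacity against 120 for the monolithic build, even though both end at 20MW.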

If Sovereignty Is Priority → Hybrid + Sovereign Cloud Zones

Organizations with strict data residency requirements should implement hybrid architectures combining public cloud for non-sensitive workloads with certified sovereign cloud zones for regulated data. This approach satisfies compliance requirements while avoiding the operational overhead of fully air-gapped environments for data that does not require such protection.

If Power Is the Constraint → On-site Renewable + PPA Strategy

Organizations in locations with constrained grid access should pursue on-site renewable generation combined with Power Purchase Agreements for supplementary supply. Solar integration is particularly attractive given GCC irradiance levels, while hydrogen and SMR options provide baseload reliability for critical workloads.

If AI Training Is the Core → Liquid-Cooled AI Factory Build

Organizations with substantial AI training workloads should invest in purpose-built liquid-cooled facilities designed from the ground up for 50-100kW+ rack densities. These AI Factories require different architectural approaches than general-purpose data centers—attempting to retrofit existing facilities typically results in compromised performance and higher long-term costs.
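Because most organizations face more than one of these constraints at once, it can help to treat the guide as a lookup that returns every applicable strategy rather than a single answer. The sketch below is a hypothetical encoding of the four branches; the enum and strategy labels are illustrative names, not a product or framework.

```python
from enum import Enum


class Priority(Enum):
    SPEED = "speed"
    SOVEREIGNTY = "sovereignty"
    POWER = "power"
    AI_TRAINING = "ai_training"


# Order and wording follow the four branches of the decision guide.
STRATEGY = {
    Priority.SPEED: "Modular / prefab data centers",
    Priority.SOVEREIGNTY: "Hybrid architecture with sovereign cloud zones",
    Priority.POWER: "On-site renewables plus PPA strategy",
    Priority.AI_TRAINING: "Purpose-built liquid-cooled AI factory",
}


def recommend(priorities: set[Priority]) -> list[str]:
    """Return one strategy per stated priority, in the guide's order."""
    # Iterating an Enum class yields its members in definition order.
    return [STRATEGY[p] for p in Priority if p in priorities]


if __name__ == "__main__":
    for line in recommend({Priority.POWER, Priority.SPEED}):
        print("-", line)
```

Returning a list rather than a single value makes overlapping constraints explicit, for example a power-constrained site that also needs capacity quickly.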

Conclusion — GCC Is Redefining What "Scalable" Means

The data center infrastructure being built across Saudi Arabia and the UAE is not simply larger than what came before—it is fundamentally different. AI Factories rather than general-purpose facilities. Liquid cooling rather than air. Sovereign architectures rather than public cloud extensions. Independent power rather than grid dependency.

For infrastructure leaders across the region, the strategic imperative is clear. The organizations that will lead in AI capability are those that master the Heat-Power-Law trilemma today. They are signing power agreements now for capacity needed in 2028. They are classifying data to optimize sovereign versus public deployment. They are building partnerships with hyperscalers that provide technology access with local control.

The next decade of AI innovation in the Gulf will be built on infrastructure decisions made in the next 24 months. The patterns emerging from regional mega-projects provide a roadmap. The question for every CIO, CTO, and infrastructure leader is whether their organization will build for the AI of tomorrow—or remain constrained by the physics and policies of yesterday.

Shivisha Patel
