The global behavioral health landscape is facing a paradox. While awareness and demand for mental health services have reached historic highs, the supply of clinicians remains critically low. The World Health Organization (WHO) estimates a global shortage of mental health professionals, leaving millions without timely access to care. This supply-demand gap has created an urgent imperative for innovation.

Enter Artificial Intelligence (AI) for mental healthcare—a technological shift that promises not to replace clinicians, but to extend their reach, enhance diagnostic precision, and democratize access to care.

The widening gap between demand and supply signals a tipping point: the industry is moving from "what if" to "how now" on AI-based mental health solutions. This blog explores the current state of AI in mental healthcare, from patient-facing apps to provider-focused clinical decision support, and the complex ethical landscape that defines this frontier.

What is AI in Mental Health?

At its core, AI in mental health refers to the use of machine learning (ML), natural language processing (NLP), and predictive analytics to simulate aspects of human intelligence in behavioral health. Unlike static software, these systems learn from data: they analyze patterns in speech, text, and electronic health records (EHRs) to identify markers of distress that might escape the human eye.

How AI Can Transform Mental Health Care

The transformative power of AI lies in its ability to process vast amounts of unstructured data. In traditional workflows, a therapist relies on patient self-reports during a 50-minute session. AI, by contrast, can provide continuous, passive monitoring or analyze years of clinical notes in seconds to flag risk factors.

It is crucial to frame the comparison between AI and traditional therapy correctly. AI is not a replacement for the therapeutic alliance; the human connection remains among the strongest predictors of clinical success. Instead, AI acts as a force multiplier, handling data synthesis, screening, and routine engagement so clinicians can focus on complex care.

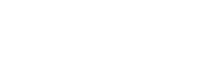

Key Applications of AI in Mental Healthcare

The ecosystem of mental health AI solutions for providers and patients is rapidly expanding. We can categorize these applications into two broad streams: patient-facing tools and provider-enablement technologies.

1. AI Mental Health Chatbots and Apps

The most visible application is the AI mental health app or chatbot. Powered by NLP, these tools offer 24/7 on-demand support.

- Cognitive Behavioral Therapy (CBT) Bots: Advanced chatbots use structured conversations to guide users through CBT exercises, helping them challenge negative thought patterns.

- Crisis Triage: An AI mental health chatbot can detect keywords related to self-harm or suicide and immediately escalate the interaction to a human crisis counselor.

- Just-in-Time Adaptive Interventions (JITAIs): These systems push personalized interventions to patients' smartphones exactly when they are most needed (e.g., detecting high stress via heart rate variability and suggesting a breathing exercise).
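To make the crisis triage idea above concrete, here is a minimal sketch of keyword-based escalation. The phrase list and the `route_message` function are illustrative assumptions, not taken from any specific product; a real deployment would likely combine clinically validated models with human review rather than rely on a short keyword list.

```python
import re

# Hypothetical phrase list for illustration only; a production system would
# need clinically reviewed patterns and a human reviewer behind every alert.
CRISIS_PATTERNS = [
    r"\bkill myself\b",
    r"\bsuicid(e|al)\b",
    r"\bself[- ]harm\b",
    r"\bend my life\b",
]
_CRISIS_RE = re.compile("|".join(CRISIS_PATTERNS), re.IGNORECASE)


def route_message(text: str) -> str:
    """Return 'escalate_to_human' when a crisis pattern matches, else 'continue_bot'."""
    if _CRISIS_RE.search(text):
        return "escalate_to_human"
    return "continue_bot"
```

Keyword matching is deliberately biased toward over-escalation here: a false alarm costs a counselor a few minutes, while a missed message can cost far more.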

2. AI-Based Mental Health Screening and Assessment

Subjectivity is a longstanding challenge in psychiatry. AI-based mental health screening aims to introduce objective biomarkers into the diagnostic process.

- Vocal Biomarkers: Algorithms can analyze pitch, tone, and speech rate to detect early signs of depression or anxiety, often before the patient is consciously aware of them.

- Digital Phenotyping: By analyzing smartphone data (typing speed, sleep patterns, social activity), AI mental health assessment tools can predict manic episodes in bipolar disorder or relapse in schizophrenia.

- AI for Depression & Anxiety Screening: Automated tools can screen patient populations at scale, flagging high-risk individuals for immediate clinical follow-up.
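As one illustration of screening at scale, the sketch below scores a batch of questionnaire responses and flags patients for follow-up. The article does not name an instrument, so the PHQ-9 (nine items scored 0 to 3, total 0 to 27) is used here as an assumption; the `screen` function, the cutoff of 10, and the rule that any non-zero answer on item 9 is flagged are illustrative choices that a clinic would set with its own clinical leads.

```python
from typing import Dict, List


def phq9_severity(total: int) -> str:
    """Map a PHQ-9 total score (0-27) to its standard severity band."""
    if not 0 <= total <= 27:
        raise ValueError("PHQ-9 total must be between 0 and 27")
    if total <= 4:
        return "minimal"
    if total <= 9:
        return "mild"
    if total <= 14:
        return "moderate"
    if total <= 19:
        return "moderately severe"
    return "severe"


def screen(responses: Dict[str, List[int]], flag_at: int = 10) -> List[str]:
    """Return ids of patients whose total reaches flag_at or who endorse item 9."""
    flagged = []
    for patient_id, answers in responses.items():
        if len(answers) != 9 or any(a not in (0, 1, 2, 3) for a in answers):
            raise ValueError(f"invalid PHQ-9 answers for {patient_id}")
        # Item 9 (index 8) asks about thoughts of self-harm, so any non-zero
        # answer is flagged regardless of the total (an assumed policy).
        if sum(answers) >= flag_at or answers[8] > 0:
            flagged.append(patient_id)
    return flagged
```

The output is a worklist for clinicians, not a diagnosis: the flag only decides who gets looked at first.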

3. Clinical Decision Support and Predictive Analytics

For behavioral health clinics, AI mental health data analytics are becoming indispensable.

- Pharmacogenomics & Precision Medicine: AI analyzes genetic markers alongside patient history to predict which antidepressants or antipsychotics are most likely to work, reducing the "trial and error" period of medication management.

- Suicide Risk Prediction: Advanced algorithms analyze unstructured notes and historical data to identify patients with a high risk of self-harm, alerting clinicians to intervene proactively.

- Treatment Optimization: AI-driven therapy tools can suggest personalized treatment pathways based on how similar patient profiles have responded to specific interventions in the past.
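The treatment optimization idea above, suggesting pathways based on how similar patients responded, can be sketched as a nearest-neighbour lookup. Everything here is a simplified assumption: the feature vectors, the `suggest_treatment` function, and the use of plain Euclidean distance stand in for what would in practice be a validated model over carefully normalized clinical features.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

# (feature vector, treatment given, whether the patient responded);
# all values are hypothetical and assumed to be on comparable scales.
History = Tuple[List[float], str, bool]


def suggest_treatment(patient: List[float], history: List[History],
                      k: int = 3) -> Dict[str, float]:
    """Return the response rate per treatment among the k most similar past patients."""
    nearest = sorted(history, key=lambda h: math.dist(patient, h[0]))[:k]
    counts = defaultdict(lambda: [0, 0])  # treatment -> [responders, total]
    for _, treatment, responded in nearest:
        counts[treatment][0] += int(responded)
        counts[treatment][1] += 1
    return {t: r / n for t, (r, n) in counts.items()}
```

With only a handful of neighbours the rates are noisy, so a tool like this is better read as a prompt for clinical judgment than as a recommendation.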

4. Remote Patient Monitoring (RPM) and VR Integration

AI for remote mental health support allows for continuous care outside the clinic.

- AI-Assisted Patient Mental Health Tracking: Systems monitor adherence to digital therapeutics and alert providers if a patient’s condition deteriorates.

- VR Exposure Therapy: AI-driven Virtual Reality scenarios dynamically adjust anxiety-inducing stimuli (e.g., for PTSD or phobias) based on the patient's real-time physiological response (biofeedback), ensuring therapy is challenging but safe.
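The biofeedback loop described for VR exposure therapy can be pictured as a simple controller that raises or lowers stimulus intensity to keep the patient inside a target arousal band. This is a sketch under assumptions: the `next_intensity` function, the heart-rate band of 90 to 120 bpm, and the 1 to 10 intensity scale are hypothetical and would be set per patient by the treating clinician.

```python
def next_intensity(current: int, heart_rate: float,
                   low: float = 90.0, high: float = 120.0,
                   min_level: int = 1, max_level: int = 10) -> int:
    """Step the stimulus intensity by one level to keep heart rate within [low, high]."""
    if heart_rate > high:
        step = -1  # patient is over-aroused: ease off
    elif heart_rate < low:
        step = 1   # patient is under-challenged: increase exposure
    else:
        step = 0   # inside the target band: hold steady
    # Clamp so the scenario never leaves the clinician-approved range.
    return max(min_level, min(max_level, current + step))
```

Moving one level at a time keeps the scenario from swinging abruptly, which matters when the goal is exposure that is challenging but tolerable.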

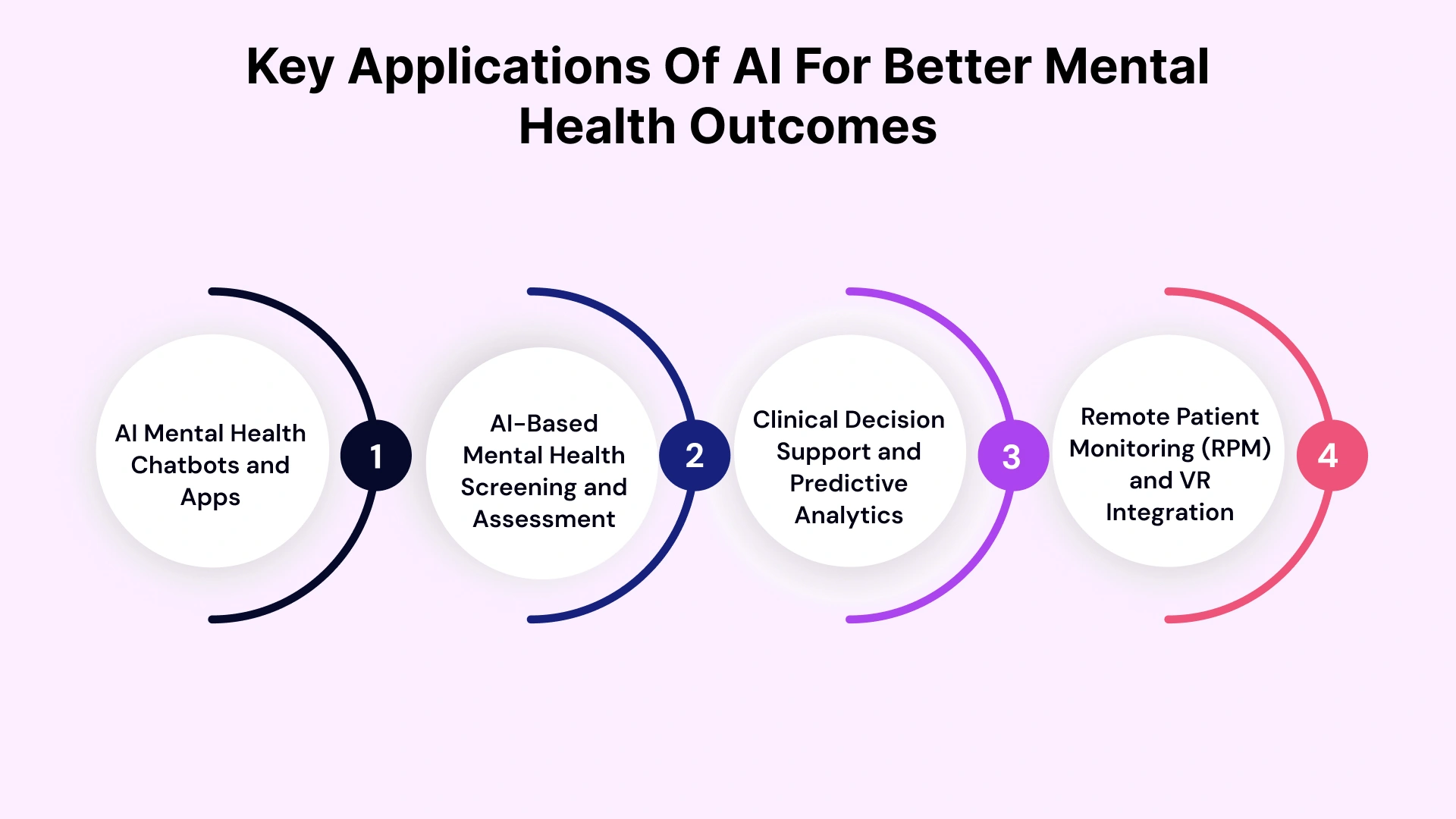

Benefits of AI for Behavioral Health Clinics

For healthcare executives and clinic owners, the benefits of AI for behavioral health clinics extend beyond clinical outcomes to operational sustainability.

1. Operational ROI and Efficiency

The ROI of AI for mental health clinics is driven primarily by time savings. By automating intake, triage, and documentation, clinics can increase patient throughput without compromising care quality.

- Reduced Administrative Burden: Ambient AI scribes can save clinicians 2–3 hours per day by listening to sessions (with consent) and auto-generating SOAP notes, directly combating the burnout crisis.

- Optimized Revenue Cycle Management: AI tools can audit claims before submission, predict denials based on payer history, and suggest code corrections to accelerate reimbursement.

2. Enhanced Scalability and Access

AI-based mental health solutions let organizations, including startups, scale services rapidly. A single AI platform can serve thousands of users simultaneously through automated triage, reserving human therapists' time for the patients who need high-acuity care.

3. Value-Based Care Alignment

As payment models shift from fee-for-service to value-based care, clinics must prove patient improvement. AI mental health tools for therapists provide the objective data (outcomes tracking, engagement metrics) needed to demonstrate efficacy to payers and secure higher reimbursement rates.

4. Staff Retention and Satisfaction

By removing the drudgery of paperwork and scheduling, AI mental health software allows clinicians to practice at the top of their license. This reduction in administrative fatigue is a powerful tool for retaining top talent in a competitive labor market.

5. Proactive Risk Mitigation and Patient Safety

AI offers a crucial layer of safety by continuously monitoring data for potential crisis indicators. Machine learning models analyze patient engagement patterns, text sentiment, and physiological data to predict acute episodes, self-harm risk, or treatment dropout days or weeks in advance. This allows clinicians to perform just-in-time interventions, significantly improving safety and reducing the clinic's liability.

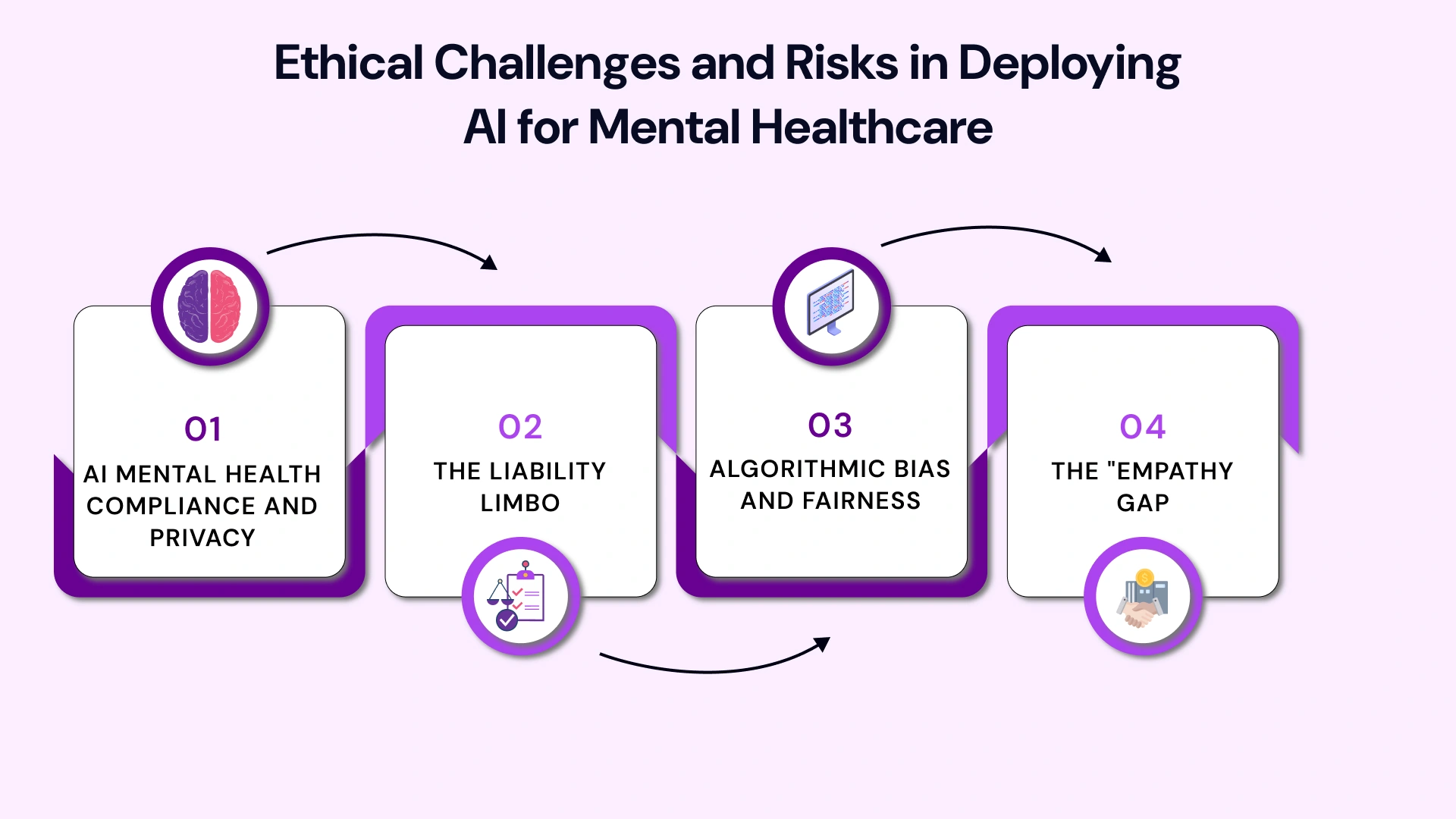

Challenges and Risks: Navigating the Ethical Frontier

Despite the promise, implementing AI in mental health practice is complex. Leaders must proactively address the challenges of AI adoption for mental health.

1. AI Mental Health Compliance and Privacy

Mental health data is exceptionally sensitive. AI mental health compliance and privacy are non-negotiable.

- HIPAA & GDPR: Any AI mental health software must be rigorously compliant, ensuring data encryption at rest and in transit.

- Data Sovereignty: There are concerns regarding who owns the data generated during interactions with AI mental health services for healthcare providers.

- Evolving Regulation: Patient privacy laws covering AI in mental health are still evolving, and organizations must stay ahead of regulatory changes to avoid substantial fines.

2. The Liability Limbo

If an AI tool fails to flag a suicidal patient, or conversely, flags a false positive that leads to involuntary commitment, who is liable? The developer? The clinician? The clinic? The legal framework for AI mental health use cases for clinics is still being written, creating a "liability limbo" that requires robust insurance and indemnification strategies.

3. Algorithmic Bias and Fairness

If an AI model is trained predominantly on data from a single demographic, it may fail to diagnose or treat individuals from underrepresented groups accurately. Ensuring diversity in training datasets is a critical ethical obligation to prevent automating inequality.
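One practical way to check for the bias described above is to compare a model's recall (the share of true cases it catches) across demographic groups. The sketch below assumes labeled evaluation records are available; the `recall_by_group` function and the group labels are hypothetical, and recall is only one of several fairness metrics a team might track.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def recall_by_group(records: Iterable[Tuple[str, bool, bool]]) -> Dict[str, float]:
    """Compute recall per group from (group, actual_positive, predicted_positive) records."""
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                true_positives[group] += 1
    # Groups with no actual positives are omitted because recall is undefined for them.
    return {g: true_positives[g] / positives[g] for g in positives}
```

A large gap between the highest and lowest group recall suggests the model is missing cases in some populations, which is a cue to revisit the training data before deployment.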

4. The "Empathy Gap"

Ethical considerations of AI in behavioral health include the risk of over-reliance. Machines cannot replicate human empathy. There is a risk that patients interacting primarily with bots may feel isolated. There must always be a "human in the loop" for high-stakes decisions and emotional validation.

Future Trends: AI-Augmented Mental Healthcare in the 2030s

The intersection of AI development and behavioral health is poised for transformative growth, with the global market for AI-powered mental health solutions projected to more than quadruple by 2030. The future will be defined by precision, accessibility, and the seamless integration of technology into every phase of the care continuum.

Prescription Digital Therapeutics (PDTs) and Personalized Treatment

The shift from generalized care to highly personalized, preventive models will accelerate.

- Adaptive Therapeutics: Expect a significant increase in Prescription Digital Therapeutics (PDTs)—FDA-cleared software prescribed by clinicians. AI will move beyond simple delivery to personalize therapeutic modules in real time, adjusting interventions such as Cognitive Behavioral Therapy (CBT) based on a user's current mood, progress, and behavioral patterns.

- Treatment Response Prediction: Advanced Machine Learning (ML) models will increasingly use multi-modal data (genetic profiles, clinical histories, brain imaging, digital phenotyping) to predict a patient's likely response to specific medications or psychotherapies before treatment even begins, significantly reducing the trial-and-error period.

Seamless Integration with Wearables and Biosensors

AI will move beyond analyzing text to understanding the body's subtle, continuous signals, enabling real-time risk detection and "just-in-time" intervention.

Passive Biometric Monitoring: AI mental health assessment tools will pull real-time bio-data from consumer wearables and specialized medical sensors. This includes subtle cues like:

- Electrodermal Activity (EDA): Stress levels detected via sweat sensors.

- Heart Rate Variability (HRV) and ECG: Insights into emotional regulation and stress-related conditions.

- Voice and Speech Analysis: Identifying vocal biomarkers (pitch, tempo, cadence) linked to anxiety, depression, or cognitive decline with high accuracy.
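For the HRV signal listed above, a common time-domain measure is RMSSD: the root mean square of successive differences between heartbeat (RR) intervals. The sketch below computes it and applies a simple trigger; the `should_prompt_breathing` function, the per-patient baseline, and the 0.7 drop ratio are illustrative assumptions rather than clinical thresholds.

```python
import math
from typing import Sequence


def rmssd(rr_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (milliseconds)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def should_prompt_breathing(rr_ms: Sequence[float], baseline_rmssd: float,
                            drop_ratio: float = 0.7) -> bool:
    """Return True when RMSSD falls below an assumed fraction of the patient's baseline."""
    return rmssd(rr_ms) < drop_ratio * baseline_rmssd
```

Comparing against each patient's own baseline, rather than a population-wide number, reflects the fact that resting HRV varies widely between individuals.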

Ambient Intelligence: The goal is a connected care ecosystem in which ambient intelligence from passive sensors provides a holistic, continuous view of the patient's psychological state, enabling clinicians to receive alerts up to several days before a human could recognize a crisis.

Generative AI for Clinical Augmentation and Training

Generative AI (GenAI), like Large Language Models (LLMs), will redefine both the administrative and educational aspects of mental healthcare.

- Hyper-Realistic Clinician Training: Therapists and trainees will practice handling rare, dangerous, or sensitive clinical scenarios against hyper-realistic AI patient avatars. These generative agents can be programmed with specific backgrounds, identities, and conditions, offering a safe, repeatable, and standardized training environment.

- Administrative Efficiency: AI scribes and summarization tools will move from simple transcription to generating highly structured, compliant, and succinct clinical documentation (e.g., SOAP notes) immediately after a session, dramatically reducing the administrative burden on clinicians.

Custom Development and Data Sovereignty

The focus for clinics will shift toward bespoke, localized solutions.

- Bespoke AI Models: Demand for custom healthcare software development will rise as clinics and large systems seek to build AI models tailored to their unique patient populations, regional data, and ethical guidelines, rather than relying on generic, off-the-shelf tools.

- Secure Cloud Deployment: To meet strict data sovereignty and privacy regulations (such as HIPAA and the EU's AI Act), advanced transformer models will increasingly run within virtual private clouds (VPCs) and hybrid architectures, ensuring patient data remains localized and secure.

Leverage VLink Healthcare Software Development Services for AI Integration

For organizations looking to bridge the gap between concept and clinical reality, partnering with a specialized technical partner is essential. Off-the-shelf tools often fail to meet the nuanced needs of behavioral health workflows. This is where VLink's Healthcare Software Development Services excel.

Our Expertise:

- Custom AI Model Development: VLink specializes in building bespoke AI mental health solutions for providers, trained on your specific dataset to achieve higher accuracy and relevance than generic models.

- HIPAA-Compliant Engineering: Security is embedded into the code from day one, not added as an afterthought. VLink ensures your AI mental health platform, whether for a startup or an established clinic, meets the strictest compliance standards (HIPAA, GDPR, SOC 2).

- Seamless EHR Integration: One of the biggest hurdles is getting AI to talk to your existing Electronic Health Record system. VLink’s engineers are experts in HL7 and FHIR standards, allowing for the creation of AI mental health tools for therapists that integrate directly into their existing dashboards without disrupting workflows.

- Digital Twins & Predictive Modeling: Our dedicated team leverages advanced "Digital Twin" technology to simulate patient treatment responses, enabling risk-free scenario planning and AI-driven mental health data analytics to drive decision-making.
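To give a sense of what FHIR-based EHR integration involves, the sketch below builds a minimal FHIR R4 Observation that an AI screening tool might send to an EHR. It is a generic illustration, not a description of VLink's implementation: the `build_observation` function, the patient id, and the score are hypothetical, and the LOINC code shown should be verified against the LOINC database before use.

```python
import json
from typing import Any, Dict


def build_observation(patient_id: str, loinc_code: str, display: str,
                      score: int, effective: str) -> Dict[str, Any]:
    """Build a minimal FHIR R4 Observation carrying an integer score."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "code": {
            "coding": [
                {"system": "http://loinc.org", "code": loinc_code, "display": display}
            ]
        },
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": effective,
        "valueInteger": score,
    }


# Hypothetical patient id and score; 44261-6 is the LOINC code commonly used
# for the PHQ-9 total score (an assumption to confirm before production use).
observation = build_observation(
    "example-123", "44261-6", "PHQ-9 total score", 12, "2024-01-15T10:30:00Z"
)
payload = json.dumps(observation, indent=2)
```

Using a standard resource and coding system is what lets the result appear in a clinician's existing dashboard instead of a separate tool.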

Conclusion

AI-based mental health solutions represent one of the most significant shifts in behavioral healthcare in decades. From chatbots offering immediate support to data analytics that flag a crisis before it strikes, the technology is reshaping the continuum of care.

For providers and innovators, the path forward involves a balanced approach: weighing the pros and cons of AI for mental health, investing in robust AI mental health software, and maintaining a steadfast commitment to ethical standards.

As the sector evolves, partnering with experienced technology specialists becomes crucial. Whether you are building an AI mental health platform for startups or integrating AI mental health solutions for providers, the right technical foundation is the difference between a novelty and a clinical breakthrough.

Ready to turn AI potential into a clinical reality? Our team provides the strategic and technical expertise needed to navigate the complexities of AI development in mental healthcare, ensuring compliance, efficacy, and ethical design. Contact us now and secure your future in behavioral health innovation. We are ready to build.

Shivisha Patel
